Artificial intelligence has gained enormous momentum in recent years. Among its many terms, "generative AI" and "large language model" are probably two of the most important to understand.

Both terms are central to current AI systems, but the concepts behind them differ in their goals, purposes, and functionality.

Anyone interested in machine learning, and in the impact AI technologies could have on areas as diverse as natural language processing (NLP), content creation, and language translation, should understand what distinguishes these two kinds of AI.

What Is Generative AI?

Generative AI refers to a class of AI capable of generating new content. That might mean text, images, audio, video, or perhaps even code.


These models are trained on enormous datasets with deep learning methods to learn the underlying patterns in the data.

The objective is to create new content that is hard to distinguish from content created by humans.
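To make "learn the patterns in the data, then generate new content" concrete, here is a deliberately tiny sketch, not how production generative AI works: it "trains" by estimating the mean and standard deviation of a one-dimensional dataset, then generates new values by sampling from the learned Gaussian distribution. The dataset and function names are hypothetical; real generative models replace these two parameters with millions or billions of neural network weights.

```python
import random
import statistics


def fit_gaussian(data: list[float]) -> tuple[float, float]:
    """'Train' the model: learn the mean and standard deviation of the data."""
    return statistics.mean(data), statistics.stdev(data)


def generate(mean: float, std: float, n: int, seed: int = 0) -> list[float]:
    """Generate n new values by sampling from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


# Hypothetical training data, e.g. heights in centimeters.
training_data = [162.0, 170.5, 168.2, 175.1, 159.8, 181.3, 166.4, 172.9]

mean, std = fit_gaussian(training_data)
new_samples = generate(mean, std, n=5)
print(f"learned mean={mean:.1f}, std={std:.1f}")
print("generated:", [round(x, 1) for x in new_samples])
```

The generated values resemble the training data statistically without copying any individual example, which is the core idea generative models scale up.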

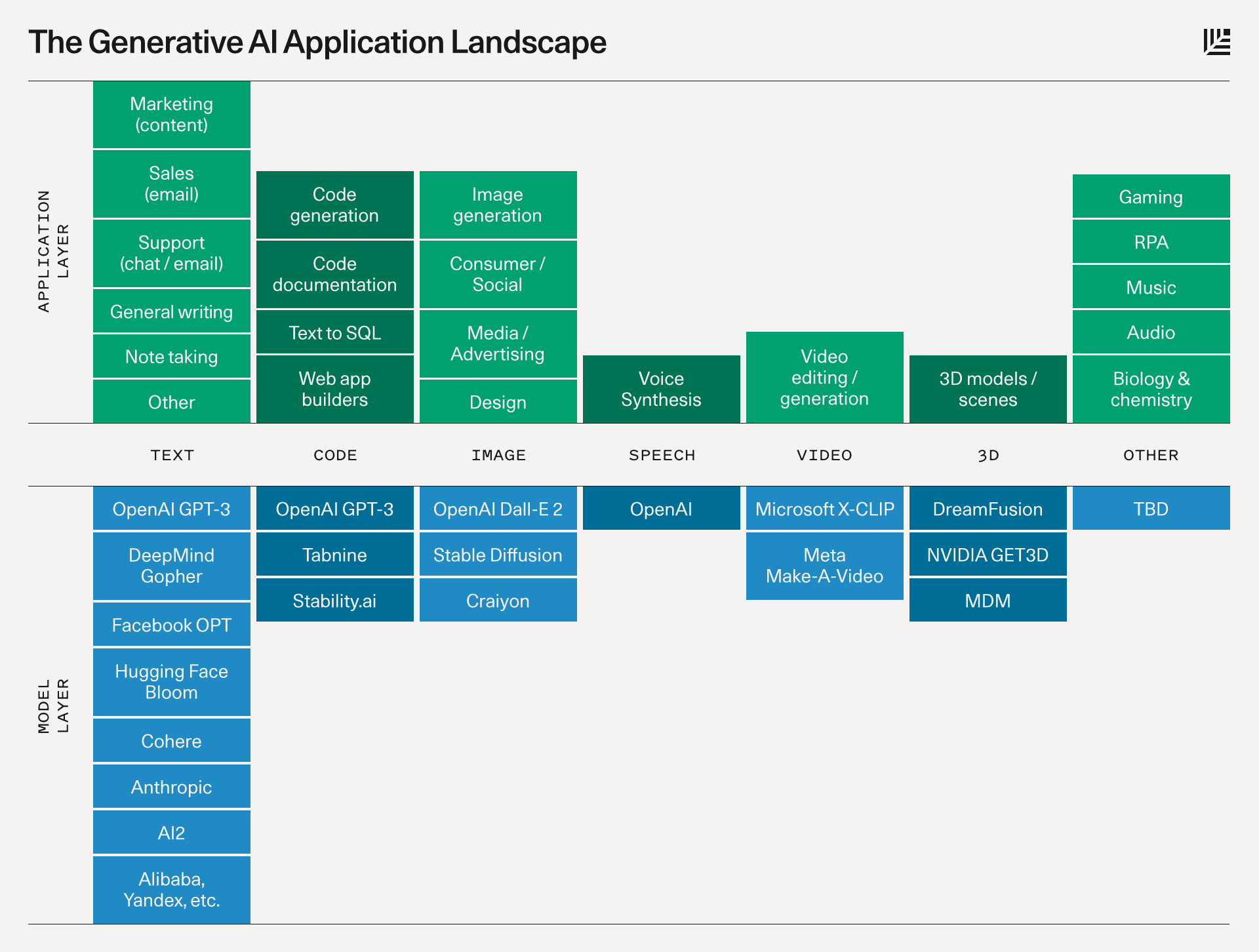

Generative AI includes the tools and models that can write coherent, contextually appropriate text, create images, compose music, or even generate video.

One of the major goals of generative AI is to support content creation across many kinds of media.

For text, these include tasks such as text generation and textual analysis, in which generative AI tools produce human-like text.

Image and other media generation also fall within generative AI, typically using diffusion models or, in some cases, generative adversarial networks (GANs).

What Are Large Language Models (LLMs)?

Large language models are a subset of generative AI systems that specialize in processing and understanding natural language.

Because they are built to work with text and human language, these models can perform tasks such as summarization, virtual assistance, and translation.

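LLMs such as GPT go through an extensive pre-training phase on large text corpora, during which they learn the patterns of human language along with broad general knowledge. For GPT-style models, the pre-training objective is to predict the next token in a sequence.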
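GPT-style pre-training is, at its core, next-token prediction. The sketch below shrinks that idea to a word-level bigram model in plain Python: it counts which word follows which in a toy corpus, then generates text by repeatedly choosing the most frequent next word. The corpus and function names are hypothetical, and real LLMs use transformer networks over subword tokens rather than raw counts, so treat this only as an illustration of the objective.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = corpus.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def generate(model: dict[str, Counter], start: str, max_words: int = 6) -> str:
    """Greedy generation: always append the most likely next word."""
    output = [start]
    for _ in range(max_words):
        followers = model.get(output[-1])
        if not followers:  # no known continuation, so stop early
            break
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical toy corpus standing in for web-scale pre-training text.
corpus = (
    "the model reads text . the model learns patterns . "
    "the model writes text ."
)
model = train_bigram(corpus)
print(generate(model, "the"))
```

Greedy decoding is used here for simplicity; real systems usually sample from the predicted distribution so that output is less repetitive.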

Typical uses of LLMs include text generation, question answering, language translation, and sentiment analysis.

Because these tasks all center on text, LLMs are trained on diverse text datasets with deep learning methods so that they perform well across them.

Although LLMs sit within the broader generative AI landscape, they specialize in natural language understanding and generation.

Key Differences Between LLMs and Generative AI Models

Although generative AI and LLMs are both categories of AI models, they differ in scope, training data, task complexity, model design, and ethical concerns, as described below.

1. Scope and Applications

- Generative AI:

Generative AI covers a broader domain: its systems generate text, images, music, and video.

It spans a wide variety of applications, including content creation, image generation, code generation tools, and synthetic data production.

For example, DALL-E and Midjourney generate images from text descriptions, while other tools generate audio and video.

- LLMs:

LLMs, on the other hand, focus entirely on human language.

Their major applications include language translation, text generation, and summarization, among other text-based tasks.

They can produce coherent, contextually appropriate text over long passages, and they are also well suited to textual analysis, natural language understanding, and sentiment analysis.

2. Training Data

- Generative AI:

Generative AI models are trained on different kinds of data depending on the content they are meant to produce.

For example, image generators are typically trained on large image datasets, while music generators are trained on music or audio data.

The effectiveness of these models also depends on the quality of their training data: more varied, higher-quality data generally yields better results in any medium.

- LLMs:

LLMs are trained primarily on text.

These text datasets are typically huge and drawn from books, articles, and the internet. LLMs use this wide range of text to develop language understanding skills.

Training data quality is crucial for ensuring that a language model can generate human-like text and carry out language tasks accurately.

3. Complexity of Tasks

- Generative AI:

Generative AI models are used for tasks ranging from simple content creation to highly complex work such as software development or media generation.

In most cases, this involves training a generative model to learn the patterns in its input data. Architectures such as diffusion models and GANs are used for particularly demanding tasks, for example generating music or producing highly realistic images.

- LLMs:

LLMs carry out many text-related tasks, such as machine translation, textual analysis, and language generation, but these tasks are less varied than those of generative AI as a whole, which spans multiple modalities.

LLM tasks can still be complex in terms of the language understanding they require; they are simply narrower in range.

4. Model Design

- Generative AI:

There are numerous kinds of generative models, such as diffusion models, variational autoencoders (VAEs), and GANs, each suited to producing particular kinds of content.

Different model families target different media and content requirements, such as image or audio generation.
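As one concrete example of these model families, a diffusion model learns to reverse a gradual noising process. The sketch below implements only the forward (noising) half for a single scalar, using the closed form x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps from DDPM-style models, where alpha_bar_t is the running product of (1 - beta_s). The linear beta schedule from 1e-4 to 0.02 over 1000 steps is a common choice but should be read as an assumption here, and the learned denoising network, which is the part that actually generates content, is omitted.

```python
import math
import random


def linear_beta_schedule(
    steps: int, beta_start: float = 1e-4, beta_end: float = 0.02
) -> list[float]:
    """Noise variance added at each step, increasing linearly."""
    return [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]


def alpha_bars(betas: list[float]) -> list[float]:
    """Cumulative product of (1 - beta): how much of the original signal survives."""
    result, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        result.append(prod)
    return result


def noisy_sample(x0: float, t: int, a_bars: list[float], rng: random.Random) -> float:
    """Jump straight to step t of the forward process in closed form."""
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(a_bars[t]) * x0 + math.sqrt(1.0 - a_bars[t]) * eps


betas = linear_beta_schedule(1000)
a_bars = alpha_bars(betas)
rng = random.Random(42)
for t in (0, 250, 999):
    x_t = noisy_sample(1.0, t, a_bars, rng)
    print(f"t={t:4d}  signal kept={a_bars[t]:.4f}  x_t={x_t:+.3f}")
```

By the last step almost none of the original signal remains, so a trained denoiser that runs this process backwards can start from pure noise and produce a new sample.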

- LLMs:

LLMs are pre-trained models, such as GPT and BERT, designed to work with textual data.

Their main focus is therefore NLP tasks, and most are built on the transformer, a neural network architecture designed to handle long sequences. Transformers use a mechanism called self-attention to relate each token in the input to every other token.
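The core of a transformer is attention. The sketch below implements single-head scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, over plain Python lists so that every step is visible. For simplicity it assumes the queries, keys, and values are the token vectors themselves, with no learned projection matrices, masking, or multiple heads, so it illustrates the mechanism rather than a full transformer layer.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that sum to 1 (shifted for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query gets a weighted average of the values."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Three hypothetical 2-dimensional token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Self-attention: queries, keys, and values all come from the same tokens.
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Because every token attends to every other token in one step, attention can relate words that are far apart in a sequence, which is why the architecture suits long text.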

5. Ethics

- Generative AI:

Ethical concerns are broader for generative AI because it can produce highly realistic content across many kinds of media.

Deepfake images, videos, and other data raise serious ethical questions about privacy, misinformation, and consent.

- LLMs:

LLMs raise ethical concerns of their own, chiefly the generation of harmful or biased text, though these concerns are mostly linguistic in nature.

Even so, as LLMs continue to grow and change, it will be crucial to uphold ethical standards around problems such as bias and disinformation.

How Generative AI and LLMs Complement Each Other

Although generative AI and large language models differ in many ways, they often complement each other in AI-driven applications.

For example, GPT is both a generative AI tool and a large language model, and it is very helpful for text creation and code generation.

Such LLMs are generative AI models trained on language data with deep learning techniques to produce human-like text.

Generative AI, however, encompasses more than text models, extending into other forms of media.

For instance, an application centered on language translation or analysis could combine an LLM with generative models built for other tasks, such as image or audio generation.

Combined this way, AI systems can handle complex multimodal tasks that involve both textual and visual information.

Generative AI within the AI Landscape

Generative AI is at the center of current AI development and will shape its future.

Its ability to generate content in multiple forms of media makes it a critical component in industries ranging from marketing and entertainment to software development.

Generative AI models can generate images, video, music, and synthetic data, and they can also help with very complex tasks such as drug discovery and design.

This growth in sophistication, driven in large part by deep learning, has given rise to AI systems whose output comes much closer to human creativity.

Recent breakthroughs have made AI capable of emulating human creativity to the point that systems such as ChatGPT can produce coherent, contextually relevant text that is often difficult to distinguish from human writing.

Additionally, generative AI is making its way into more specialized fields. In code generation, for example, models are used to assist developers in writing more effective code.

Generative AI models have been useful in the development of tools for audio generation, content summarization, and virtual assistants.

Large Language Models in the AI Landscape

Large language models are a very important subset of generative AI.

They are used across industries that handle large amounts of text, from customer service to legal document review and academic research.

The applications of LLMs cover a wide range, from chatbots and virtual assistants that streamline communication to sentiment analysis and other natural language understanding tasks.

They also play an important role in translation and text summarization, which makes them highly useful in applications that require faithful language processing.

Large language models have opened a new era in which businesses can enrich customer experiences and automate processes, saving time on routine language-related work.

The Future of AI: LLMs and Generative AI Models

As this integration continues and large language models keep evolving, the result is likely to be far more capable AI systems that can handle multimodal tasks.

For example, future AI models might combine the text generation abilities of LLMs with the image generation abilities of diffusion models or GANs to create images or entirely new forms of content.

In addition, both LLMs and generative AI will continue to develop as learning techniques and data availability improve.

More varied training data should improve AI systems' understanding of human language, content generation, and multimedia tasks.

Conclusion

Generative AI and large language models each play a different role in the AI landscape, yet they complement each other in powerful ways. For anyone interested in the future of AI technologies, it is important to understand both their distinctions and their shared goals.

Together, these technologies are not only changing entire sectors of the economy; they are also opening the door to increasingly complex AI models that push the limits of what is possible with artificial intelligence, and perhaps eventually artificial general intelligence.

Frequently Asked Questions (FAQs)

1. How does data availability impact the performance of AI models?

Data availability plays a crucial role in the performance of AI models. The more diverse and extensive the data, the better the model can learn and generalize from different scenarios.

This is especially important for pre-trained models, which rely on large datasets during training to ensure they can handle a wide range of tasks effectively.

2. How is task complexity handled by different AI models?

Task complexity varies significantly across AI models. Simple tasks like text classification require less computational power, while more complex tasks like image generation or natural language understanding call for more advanced models. Pre-trained models in particular, having already been exposed to a broad range of data, are better equipped to handle tasks of varying complexity.

3. What role do pre-trained models play in reducing development time?

Pre-trained models are instrumental in reducing development time because they come with prior knowledge, having been trained on massive datasets.

Instead of building a model from scratch, developers can fine-tune these pre-trained models for specific tasks, saving both time and computational resources. This is especially useful when addressing tasks with high complexity.
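A minimal sketch of the idea, under heavy simplifying assumptions: a frozen "pre-trained" feature extractor is reused as-is, and only a small logistic-regression head is trained for the new task. The feature extractor here is a hypothetical hand-written keyword counter standing in for a real pre-trained network, and the approach shown is the feature-extraction (linear-probe) flavor of transfer learning; real fine-tuning typically uses a deep learning framework and often updates some or all of the pre-trained weights as well.

```python
import math


def pretrained_features(text: str) -> list[float]:
    """Hypothetical frozen feature extractor standing in for a pre-trained model.

    It is never updated during fine-tuning.
    """
    words = text.lower().split()
    positive = sum(w in {"good", "great", "love"} for w in words)
    negative = sum(w in {"bad", "awful", "hate"} for w in words)
    return [float(positive), float(negative)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(examples, epochs: int = 200, lr: float = 0.5):
    """Train only a small logistic-regression head on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = pretrained_features(text)
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = pred - label  # gradient of the log loss w.r.t. the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, text: str) -> float:
    """Probability that the text belongs to the positive class."""
    x = pretrained_features(text)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical labeled data for the new task (1 = positive sentiment).
train = [("a good film", 1), ("i love it", 1), ("awful plot", 0), ("i hate it", 0)]
w, b = fine_tune_head(train)
print(round(predict(w, b, "great acting"), 2), round(predict(w, b, "bad ending"), 2))
```

Only three numbers are learned here, which is why reusing a pre-trained representation can cut training time and data requirements so sharply.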