AI Chat Generator

Introduction

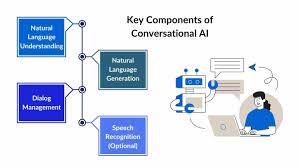

Definition of AI Chat Generator

An AI chat generator is an AI system that generates text-based responses in real time, mimicking human conversation. These systems are usually called chatbots; the more advanced ones use machine learning models to understand the conversation context and generate responses based on it.

Importance of Conversational Agents in Various Applications

AI chat generators have become important tools in modern applications across many industries, including customer service, health care, education, entertainment, and e-commerce.

They can perform tasks such as answering frequently asked questions, handling customer complaints, offering virtual assistance, and even holding therapeutic conversations.

Their value lies in reducing human effort, increasing user engagement, and providing efficient 24/7 support.

Types of Chat Generators

There are three major kinds of chat generation systems:

- Rule-Based Systems:

These systems are based on predefined rules and patterns. They work well for simple conversations but fail in complex ones.

- Retrieval-Based Systems:

They search a database of predefined responses for the most relevant one. They work best for structured queries.

- Generative Models:

The most advanced type. They create novel, context-aware text on the fly, using models such as sequence-to-sequence networks and transformer-based architectures such as GPT.

Classical Techniques for Chat Generation

-Rule-Based Systems

Rule-based chatbots follow definite scripts to communicate with users. Developers give the bot a set of "if-then" conditions, and the bot delivers a response depending on which specific language pattern the user input matches.
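
A minimal sketch of the "if-then" idea, assuming Python's standard `re` module for pattern matching. The rules, responses, and the `respond` function are hypothetical examples, not taken from any particular chatbot framework.

```python
import re

# Hypothetical rule table: each rule pairs a regex pattern (the "if")
# with a canned response (the "then"). Rules are checked in order.
RULES = [
    (re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE), "Hello! How can I help you today?"),
    (re.compile(r"\b(hours|open)\b", re.IGNORECASE), "We are open 9am to 5pm, Monday to Friday."),
    (re.compile(r"\b(refund|return)\b", re.IGNORECASE), "You can request a refund within 30 days of purchase."),
]

FALLBACK = "Sorry, I don't understand. Could you rephrase that?"


def respond(user_input: str) -> str:
    """Return the response of the first rule whose pattern matches the input."""
    for pattern, response in RULES:
        if pattern.search(user_input):
            return response
    # No rule matched: a rule-based bot can only fall back to a fixed reply.
    return FALLBACK


if __name__ == "__main__":
    for message in ["Hello there", "What are your opening hours?", "Tell me a joke"]:
        print(f"User: {message}\nBot:  {respond(message)}")
```

Any input that matches no pattern, such as "Tell me a joke", falls through to the fixed fallback reply, which is exactly the inflexibility that limits rule-based systems.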

Limitations and Challenges

The main weakness of rule-based systems is their inflexibility. They can respond only within the domain defined by their rules, so they quickly get lost in more nuanced conversations. In addition, maintaining a large number of rules becomes cumbersome.

-Retrieval-Based Systems

Retrieval-based systems do not generate new text; instead, they draw from a collection of existing responses. When the system receives a user input, it uses matching algorithms to locate the best possible response in a repository of available answers.

Methods for Retrieval of Suitable Responses

Retrieval-based models rank candidate responses using similarity-matching techniques such as TF-IDF, or vector representations from models such as Word2Vec and BERT. Although they can be more accurate than rule-based systems, their capability is bounded by the static nature of their response database.
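
The sketch below illustrates TF-IDF ranking using only the Python standard library. The repository contents and helper names are hypothetical, and the IDF formula is one common smoothed variant. Embedding-based retrieval (Word2Vec, BERT) would replace `tfidf_vector` with dense vectors but keep the same cosine-similarity ranking.

```python
import math
import re
from collections import Counter

# Hypothetical response repository: stored questions paired with answers.
REPOSITORY = [
    ("how do i reset my password", "Click 'Forgot password' on the login page."),
    ("where is my order", "You can track your order from the Orders page."),
    ("how do i cancel my subscription", "Go to Settings > Billing and choose Cancel."),
]


def tokenize(text):
    return re.findall(r"[a-z0-9']+", text.lower())


def build_idf(docs):
    """Inverse document frequency with add-one smoothing."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}


def tfidf_vector(tokens, idf):
    tf = Counter(tokens)
    # Terms never seen in the repository get no weight.
    return {t: c * idf[t] for t, c in tf.items() if t in idf}


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


DOCS = [tokenize(q) for q, _ in REPOSITORY]
IDF = build_idf(DOCS)
DOC_VECTORS = [tfidf_vector(doc, IDF) for doc in DOCS]


def retrieve(query):
    """Rank stored questions by cosine similarity; return (best answer, score)."""
    q_vec = tfidf_vector(tokenize(query), IDF)
    scores = [cosine(q_vec, d) for d in DOC_VECTORS]
    best = max(range(len(scores)), key=scores.__getitem__)
    return REPOSITORY[best][1], scores[best]


if __name__ == "__main__":
    print(retrieve("I forgot my password, how can I reset it?"))
```

A production system would typically also apply a minimum similarity threshold, so that a query with no good match gets a fallback reply instead of the closest but wrong answer.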

Generative Models for Chat Generation

-Sequence-to-Sequence Models

Sequence-to-sequence (Seq2Seq) models are typically built from recurrent neural networks (RNNs) and map an input sequence, such as a user's question, to an output sequence, in this case the chatbot's response.

Overview of Sequence-to-Sequence Architecture

A Seq2Seq model has two parts: an encoder and a decoder. The encoder processes the input and compresses its key information into a fixed-size vector, which the decoder then uses to generate the output sequence.
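
To make the encoder/decoder split concrete, here is an untrained toy version in NumPy. Assumptions: a plain tanh RNN instead of the LSTM or GRU cells usually used in practice, random weights, hypothetical vocabulary sizes and special-token ids, and greedy decoding. Its only purpose is to show how an input of any length is compressed into one fixed-size context vector that the decoder unrolls into an output sequence.

```python
import numpy as np

# Toy, untrained encoder-decoder: weights are random, so the generated
# tokens are meaningless. The point is the data flow:
# input tokens -> one fixed-size context vector -> output tokens.
rng = np.random.default_rng(0)
VOCAB, EMB, HID = 10, 8, 16  # hypothetical sizes
BOS, EOS = 0, 1              # hypothetical special-token ids

embed = rng.normal(0, 0.1, (VOCAB, EMB))
enc_Wx, enc_Wh = rng.normal(0, 0.1, (HID, EMB)), rng.normal(0, 0.1, (HID, HID))
dec_Wx, dec_Wh = rng.normal(0, 0.1, (HID, EMB)), rng.normal(0, 0.1, (HID, HID))
W_out = rng.normal(0, 0.1, (VOCAB, HID))


def encode(token_ids):
    """Run a simple tanh RNN over the input; the last hidden state is the context."""
    h = np.zeros(HID)
    for t in token_ids:
        h = np.tanh(enc_Wx @ embed[t] + enc_Wh @ h)
    return h  # same shape whatever the input length


def decode(context, max_len=5):
    """Greedy decoding: start from the context, emit the most likely token each step."""
    h, token, output = context, BOS, []
    for _ in range(max_len):
        h = np.tanh(dec_Wx @ embed[token] + dec_Wh @ h)
        token = int(np.argmax(W_out @ h))
        if token == EOS:
            break
        output.append(token)
    return output


context = encode([3, 5, 7, 2])
print(context.shape)  # (16,)
print(decode(context))
```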

Adaptation for Chat Generation Tasks

Such models were first applied to tasks such as machine translation, but they can be adapted for chat generation by training them to predict the next utterance in a conversation given the previous one.

Despite these advantages, they tend to lose contextual information in longer conversations, partly because the whole input must be squeezed into a single fixed-size vector.

-Transformers

The advent of Transformers marked a major leap in natural language processing (NLP) because they avoid the sequential-processing bottleneck that limited RNNs.

Introduction to Transformer Architecture

The Transformer architecture uses a mechanism called self-attention to process all words in a sequence in parallel rather than one at a time.

This lets the model relate every word in a sentence directly to every other word, making it much more effective at capturing context than earlier models.
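
A minimal single-head version of scaled dot-product self-attention, softmax(Q K^T / sqrt(d_k)) V, in NumPy. The sizes and random projection matrices are illustrative assumptions; real Transformers add multiple heads, positional encodings, and (in GPT-style models) a causal mask.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X has shape (seq_len, d_model). Every position attends to every other
    position through one matrix product, rather than step by step.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)    # (seq_len, seq_len) pairwise relevance
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8  # hypothetical sizes
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = self_attention(X, W_q, W_k, W_v)
print(out.shape, attn.shape)  # (4, 8) (4, 4)
```

Row i of `attn` shows how much word i draws on each other word when building its new representation.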

Applications of Transformers in Chat Generation

Transformers are the basis of many sophisticated chat models. Because they can be trained efficiently on very large amounts of data, they produce more fluent, context-aware responses, and they power some of the most advanced chat systems in use today.

-Generative Pre-Trained Transformer (GPT) Models

GPT (Generative Pre-trained Transformer) is a family of generative language models developed by OpenAI. These models are pre-trained on large amounts of text data and thereby learn to generate coherent, contextually relevant text.

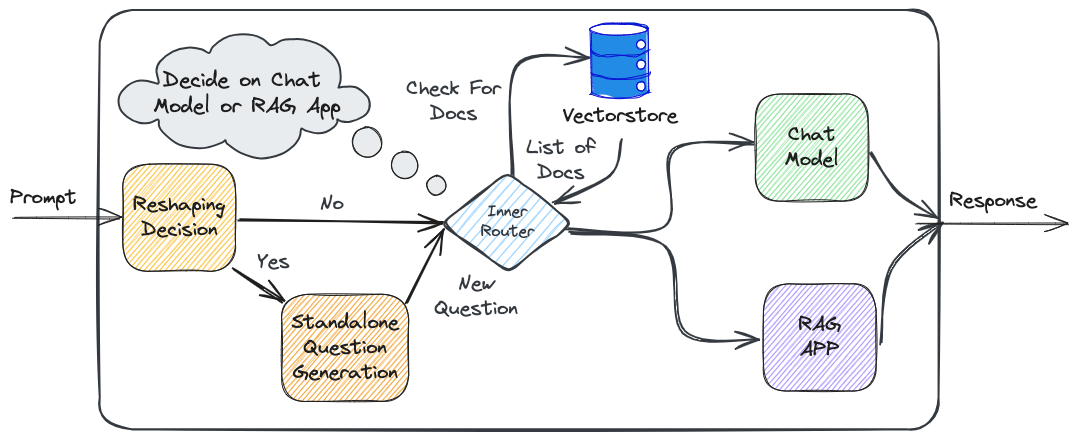

Explanation of GPT Architecture

GPT is based on the Transformer architecture (specifically, a decoder-only stack). It is first pre-trained on large datasets to produce text and then fine-tuned for specific tasks.

In practice, pre-training is next-word prediction: the model learns to predict the next token in a sequence. When it receives a prompt, it applies the same skill repeatedly, producing human-like text one token at a time.
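
The generation loop can be sketched in a few lines. To keep the example self-contained, a toy bigram model built from word counts stands in for GPT; this is a deliberate simplification, since a real GPT predicts the next token with a Transformer conditioned on the entire prefix. The corpus, `predict_next`, and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

# Toy stand-in for GPT: a bigram model that "predicts the next word" from
# counts. A real GPT conditions on the whole prefix with a Transformer, but
# the generation loop (predict, append, repeat) has the same shape.
CORPUS = "how are you today ? i am fine today . how are you today ?".split()

next_counts = defaultdict(Counter)
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    next_counts[prev][nxt] += 1  # "training": count which word follows which


def predict_next(prefix):
    """Return the most frequent next word after the last word of the prefix."""
    candidates = next_counts.get(prefix[-1])
    return candidates.most_common(1)[0][0] if candidates else None


def generate(prompt, max_new_tokens=6, stop=(".", "?")):
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        nxt = predict_next(tokens)
        if nxt is None:
            break
        tokens.append(nxt)  # feed the prediction back in as input
        if nxt in stop:
            break
    return " ".join(tokens)


print(generate("how"))  # how are you today ?
```

This sketch uses greedy decoding (always the most likely word); real systems often sample from the predicted distribution instead to get more varied responses.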

Benefits of GPT for Generating Coherent, Contextually Relevant Responses

GPT models can hold high-quality, fluent conversations across a wide range of topics. Compared with earlier models such as Seq2Seq, they track context better, produce more creative and varied responses, and require less manual intervention.

Training and Fine-Tuning Chat Generators

-Data Collection and Preprocessing

High-quality training data is critical to chatbot performance. To cope with varied dialogue contexts, chat models need exposure to a wide range of conversations.

-Data Preprocessing for Conversational AI Models

Data preprocessing involves tokenizing the text, removing irrelevant characters, handling special tokens, and organizing conversations into input-response pairs ready for training.
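
A minimal preprocessing pipeline following those steps, in plain Python. The cleaning rules, the `<bos>`/`<eos>` special-token names, and word-level tokenization are illustrative assumptions; modern chat models typically use subword tokenizers instead. Pairing every consecutive turn also assumes the conversation alternates between two speakers.

```python
import re

BOS, EOS = "<bos>", "<eos>"  # hypothetical special-token names


def clean(text):
    """Lowercase, replace characters outside a small allowed set, collapse whitespace."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9?.!,' ]+", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text):
    """Split into words and punctuation marks, then wrap with special tokens."""
    return [BOS] + re.findall(r"[a-z0-9']+|[?.!,]", text) + [EOS]


def make_pairs(conversation):
    """Turn consecutive turns into (input, response) training pairs."""
    turns = [tokenize(clean(t)) for t in conversation]
    return list(zip(turns, turns[1:]))


conversation = [
    "Hi!! Is my order #123 shipped?",
    "Yes :) it shipped yesterday.",
    "Great, thanks!",
]
for src, tgt in make_pairs(conversation):
    print(src, "->", tgt)
```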

Training on large volumes of conversational data allows the chat generator to learn both general language patterns and the typical flow of conversations.

-Training Techniques for Conversational AI Models

Chat models usually rely on supervised learning, in which they learn from labeled input-output pairs, and on reinforcement learning, which lets the model improve through trial and error guided by a reward signal.

Challenges in Convergence and Optimization of the Model

Reaching optimal model performance requires avoiding overfitting to the training data while ensuring that the model generalizes well to unseen queries.
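
One common way to guard against overfitting is early stopping: monitor the loss on a held-out validation set and stop training once it stops improving. The sketch below assumes patience-based stopping, and the loss values are invented for illustration.

```python
def early_stopping(val_losses, patience=2):
    """Return (best_epoch, stop_epoch) for patience-based early stopping.

    Training stops once validation loss has failed to improve for `patience`
    consecutive epochs; the checkpoint from `best_epoch` is the one to keep.
    """
    best_loss, best_epoch, waited = float("inf"), 0, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Hypothetical validation losses: improving at first, then rising as the
# model starts to overfit the training data.
val_losses = [2.10, 1.72, 1.55, 1.49, 1.52, 1.58, 1.66]
print(early_stopping(val_losses))  # (3, 5)
```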

Training such large models also requires substantial computational resources and a great deal of time.

Fine-Tuning

Pre-trained models can be fine-tuned for specific applications such as customer service or healthcare support.

How Pre-Trained Models Are Fine-Tuned for Specific Chatbot Applications

Fine-tuning continues training a pre-trained model on domain-specific data, adjusting its parameters to fit that domain. For instance, a GPT model pre-trained on general text can be fine-tuned on customer service transcripts so that it specializes in handling client questions.

Evaluation Metrics for Chat Generators

-Perplexity

Perplexity is a common metric for evaluating how well a language model predicts a sequence of words.

Definition of Perplexity Metric for Language Models

It measures the model's uncertainty about the next word: the better the model, the lower its perplexity.
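
Concretely, perplexity is the exponential of the average negative log-likelihood the model assigns to the actual tokens: PPL = exp(-(1/N) * sum_i log p(w_i | w_1, ..., w_(i-1))). The sketch below computes it from per-token probabilities; the probability values are hypothetical.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to each actual next token.

    PPL = exp(-(1/N) * sum(log p_i)), i.e. the exponential of the average
    negative log-likelihood (cross-entropy) per token.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


# Hypothetical probabilities two models assigned to the same 4-token reply.
confident = [0.5, 0.5, 0.5, 0.5]
uncertain = [0.1, 0.2, 0.05, 0.1]
print(perplexity(confident))  # ~2.0
print(perplexity(uncertain))
```

A perplexity of 2 means the model is, on average, as uncertain as if it were choosing between two equally likely words at each step.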

How Perplexity Is Used to Evaluate Chat Generation Models

In chat generation, a model with lower perplexity is more likely to produce coherent responses. On its own, however, perplexity is not sufficient to judge conversation quality, since a response can be fluent yet irrelevant, unhelpful, or wrong.

-Human Evaluation

Human judgment plays a crucial role in evaluating the real-world performance of AI chat generators.

Role of Human Judgment in Evaluating Chatbot Responses

Human evaluators assess the relevance, coherence, naturalness, and engagement of responses, qualities that automated metrics cannot easily capture.

Methods for Human Evaluation

Human evaluation can be done, for example, by collecting user ratings of chatbot interactions through surveys, or through qualitative reviews by expert evaluators.
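
For rating surveys, a simple first analysis is the mean and spread of scores per criterion. The 1-5 scale, criteria names, and scores below are hypothetical; the code uses Python's standard `statistics` module.

```python
from statistics import mean, stdev

# Hypothetical survey: each rater scores a chatbot reply from 1 (poor)
# to 5 (excellent) on each criterion.
ratings = [
    {"relevance": 5, "coherence": 4, "naturalness": 4, "engagement": 3},
    {"relevance": 4, "coherence": 4, "naturalness": 3, "engagement": 3},
    {"relevance": 5, "coherence": 5, "naturalness": 4, "engagement": 2},
]


def summarize(ratings):
    """Mean and sample standard deviation per criterion across raters."""
    criteria = ratings[0].keys()
    return {
        c: (round(mean(r[c] for r in ratings), 2), round(stdev([r[c] for r in ratings]), 2))
        for c in criteria
    }


for criterion, (avg, spread) in summarize(ratings).items():
    print(f"{criterion:12s} mean={avg:.2f} sd={spread:.2f}")
```

A large standard deviation signals that raters disagree, which is often worth following up with a qualitative review.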

Applications of AI Chat Generators

-Customer Support and Service

Chatbots have become common on websites, where they answer customer questions, help solve problems, and provide product information. Automated customer service saves considerable time and operating cost.

-Virtual Assistants

Virtual assistants such as Siri and Alexa are specialized chat agents. They answer questions, set reminders (for example, to buy a certain product), and control smart devices such as lights.

-Educational Chatbots

Educational chatbots support tutoring, language learning, and instant feedback for students. They can be highly personalized because they adapt to each learner's pace.

-Entertainment and Gaming

AI chat generators are increasingly used in interactive storytelling and games, creating immersive experiences in which players can interact dynamically with in-game characters.

Challenges and Limitations

-Understanding Context

A primary challenge is maintaining context over long conversations: current models can lose track of details from earlier messages and produce disjointed responses.
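
One common reason for this is the model's limited context window: only a fixed budget of recent tokens can be passed to the model, so older turns must be dropped. The sketch below assumes a hypothetical 12-word budget and counts whitespace-separated words instead of real model tokens.

```python
# Hypothetical context budget, counted in whitespace-separated words as a
# crude stand-in for model tokens.
MAX_CONTEXT_TOKENS = 12


def count_tokens(text):
    return len(text.split())


def build_context(history, new_message, budget=MAX_CONTEXT_TOKENS):
    """Keep the newest turns that fit in the budget; older turns are dropped."""
    kept = [new_message]
    used = count_tokens(new_message)
    for turn in reversed(history):  # walk from newest to oldest
        cost = count_tokens(turn)
        if used + cost > budget:
            break  # this turn and everything older is forgotten
        kept.append(turn)
        used += cost
    return list(reversed(kept))


history = [
    "My name is Dana and I live in Oslo.",  # 9 words
    "What's the weather like?",            # 4 words
    "It is sunny today.",                  # 4 words
]
print(build_context(history, "Where do I live?"))
```

Here the user's earlier statement about living in Oslo no longer fits, so the model cannot answer "Where do I live?" correctly. Mitigations such as summarizing older turns or retrieving relevant past messages aim to keep such details available.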

-Bias and Ethical Concerns

AI chat generators can become biased when they are trained on unrepresentative or biased datasets. This raises ethical concerns and calls for caution when chatbots are deployed in situations involving sensitive information.

-Safety and Security

Chatbots remain open to malicious use, such as generating harmful content or false information. Developers therefore need security measures to limit these risks.

Recent Advances

-Multimodal Chat Generators

Recent advances include multimodal chatbots, which can process inputs other than text, such as images, audio, and video, enriching the kinds of conversations they can support.

-Interactive and Adaptive Chatbots

Adaptive chatbots learn from user interactions and feedback over time, improving their responses and personalizing conversations based on users' preferences.

Future Directions

-Personalized Chatbots

Over time, personalized chatbots are likely to become more prevalent, tailoring responses to each user's behavior, chat and message history, and preferences, which will make interactions feel more human.

-Emotionally Intelligent Chatbots

Emotion recognition and emotionally appropriate responses may become key features of future chatbots, making conversations more empathetic and especially valuable in mental health applications.

Conclusion

AI chat generators are well on their way to revolutionizing how humans and machines communicate, providing scalable, efficient solutions across multiple channels and many industries.

As research improves these systems, they will continue to grow in sophistication, bringing humans and computers closer together in an increasingly digital world.