Introduction

Facial recognition technology is among the most discussed advancements in AI and machine learning.

The technology, which identifies or verifies people from their facial features, has grown rapidly across many fields. Today it is used in social media platforms, security systems, and many other applications.

A major step forward in the development of this technology came in 2014, when Facebook released DeepFace.

DeepFace proved to be a groundbreaking advancement, substantially improving the performance of facial recognition systems over the industry's previous benchmarks.

This article explores how DeepFace changed the landscape of facial recognition technology, covering its technical foundations, applications, challenges, and future prospects.

What is Facial Recognition Technology?

Definition of Facial Recognition Technology

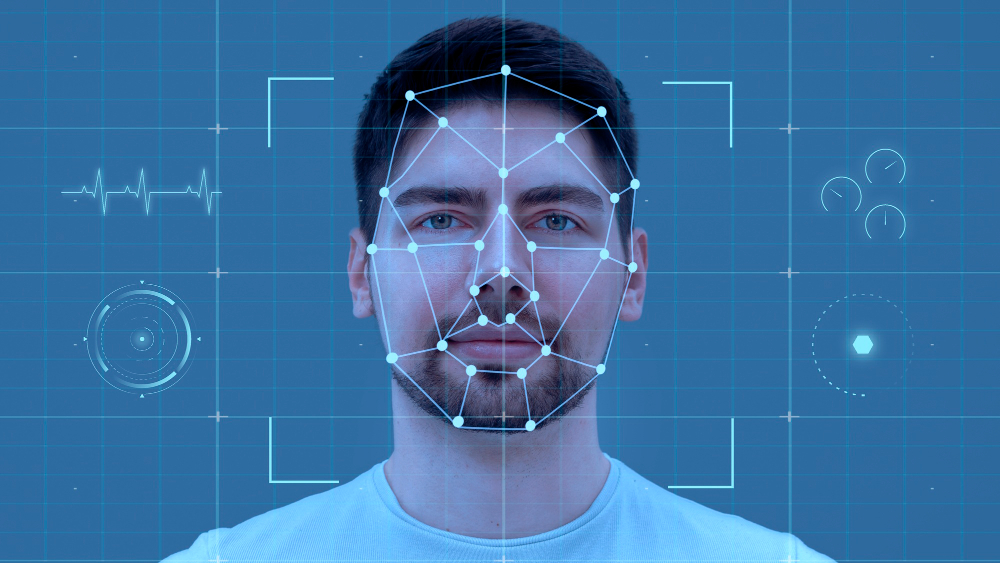

Facial recognition technology (FRT) is a biometric system that uses the unique features of an individual's face to verify or identify that person.

FRT algorithms analyze facial attributes such as the distance between the eyes, the shape of the nose, and the structure of the face, and use them to create a facial template.

To find a match, the system compares the template generated from an input image against a database of known faces. These systems are typically used for security, identity verification, and personal convenience.
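The matching step can be pictured as a nearest-neighbor search. This is a minimal sketch, assuming each facial template has already been reduced to a fixed-length vector of numbers; the `identify` function, the toy three-dimensional templates, and the use of Euclidean distance are illustrative choices, not a description of any particular product.

```python
import math

def identify(probe, gallery):
    """Return (name, distance) for the gallery template closest to `probe`.

    `gallery` maps each known person's name to a stored template
    (a list of floats with the same length as `probe`).
    Raises ValueError if the gallery is empty.
    """
    name = min(gallery, key=lambda n: math.dist(probe, gallery[n]))
    return name, math.dist(probe, gallery[name])

# Toy 3-dimensional templates; real templates are much longer vectors.
gallery = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.2, 0.8, 0.5],
}
name, distance = identify([0.85, 0.15, 0.3], gallery)
print(name)  # alice
```

Real systems usually also apply a distance threshold, so that a face belonging to nobody in the database is rejected rather than assigned to the nearest stranger.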

Historical Perspective and Development of Facial Recognition Systems

One of the first face recognition systems was developed in the mid-1960s by artificial intelligence researcher Woodrow Wilson Bledsoe.

However, the method remained relatively primitive for several decades due to limited computational power.

Only in the 2000s did advances in computing and machine learning algorithms begin to push facial recognition technology significantly forward.

Most early systems relied on geometric methods, which focus on the relative positions of features within the face.
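As a rough illustration of the geometric approach, the minimal sketch below turns a handful of landmark coordinates into ratios of distances. The landmark names and the choice of three ratios are hypothetical; dividing by the distance between the eyes is one common way to make such a descriptor independent of image size.

```python
import math

def geometric_features(lm):
    """Scale-invariant descriptor built from 2D facial landmarks.

    `lm` maps the hypothetical keys 'left_eye', 'right_eye', 'nose_tip',
    and 'mouth_center' to (x, y) pixel coordinates. Each distance is
    divided by the inter-ocular distance, so resizing the image has no effect.
    """
    iod = math.dist(lm["left_eye"], lm["right_eye"])
    eye_mid = (
        (lm["left_eye"][0] + lm["right_eye"][0]) / 2,
        (lm["left_eye"][1] + lm["right_eye"][1]) / 2,
    )
    return [
        math.dist(eye_mid, lm["nose_tip"]) / iod,             # eyes-to-nose length
        math.dist(lm["nose_tip"], lm["mouth_center"]) / iod,  # nose-to-mouth length
        math.dist(eye_mid, lm["mouth_center"]) / iod,         # eyes-to-mouth length
    ]

face = {"left_eye": (30, 40), "right_eye": (70, 40),
        "nose_tip": (50, 60), "mouth_center": (50, 80)}
print(geometric_features(face))  # [0.5, 0.5, 1.0]
```

Because every value is a ratio, doubling the coordinates of all four landmarks leaves the descriptor unchanged. Hand-picked measurements like these capture only a small part of what makes a face distinctive, which is one reason learned features eventually replaced them.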

Today, deep learning techniques, particularly convolutional neural networks (CNNs), are widely used to discover complex patterns and substantially increase recognition accuracy.

The release of DeepFace by Facebook marked a significant milestone in the state of the art for the field.

Introduction to DeepFace

Overview of DeepFace: Development and Launch by Facebook

Facebook developed and launched DeepFace in 2014. It is a facial recognition system based on deep learning, and it greatly improved performance when automatically identifying faces.

Facebook had previously performed face recognition with traditional methods, but these often produced errors or inconsistent results, particularly when dealing with diverse faces and varying conditions.

Deep neural networks offered a way to handle this challenge, and they became a game-changer for the facial recognition industry.

The system was trained on millions of labeled images from Facebook's vast database, which enabled it to recognize and label faces with great accuracy.

DeepFace was part of Facebook's wider effort to improve its site for users, particularly in photo management and content sharing.

Technical Foundation of DeepFace

Convolutional neural networks were the primary technical foundation for DeepFace. They are a class of deep learning models that have proven particularly successful at image recognition tasks.

CNNs automatically learn hierarchical facial features from images and can therefore identify faces much more precisely than traditional methods.
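To make "learning features from images" concrete, the sketch below applies one convolutional filter to a tiny image patch and passes the result through a ReLU. It is a minimal, pure-Python illustration: the 2x2 vertical-edge kernel is set by hand here, whereas a CNN learns its kernel weights from training data, and real networks apply many such filters in every layer.

```python
def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation, the operation CNN libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Zero out negative responses, as a CNN's activation function does."""
    return [[max(0, v) for v in row] for row in feature_map]

# 4x5 grayscale patch: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hand-set vertical-edge detector; a CNN would learn weights like these.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = relu(conv2d(image, kernel))
print(feature_map)  # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
```

The feature map is nonzero only in the column where dark pixels meet bright ones, which is the kind of low-level edge response the first layers of a CNN produce.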

In addition, DeepFace incorporated several innovations that set it apart from other systems.

It used landmark detection to align faces and introduced better techniques for handling variations in lighting, angle, and pose.

Purpose and Goals Behind Creating DeepFace

The core objective of DeepFace was to increase the accuracy of face recognition on Facebook, enabling the automatic identification of people in photos.

It was designed to overcome the problems of recognizing faces under different conditions, including the angle at which the face is presented, the lighting, and the facial expression.

This would reduce the manual effort users spent identifying people in photos and make Facebook a far more user-friendly platform.

DeepFace's Architecture and Technology

Explanation of the Neural Network Architecture Utilized in DeepFace

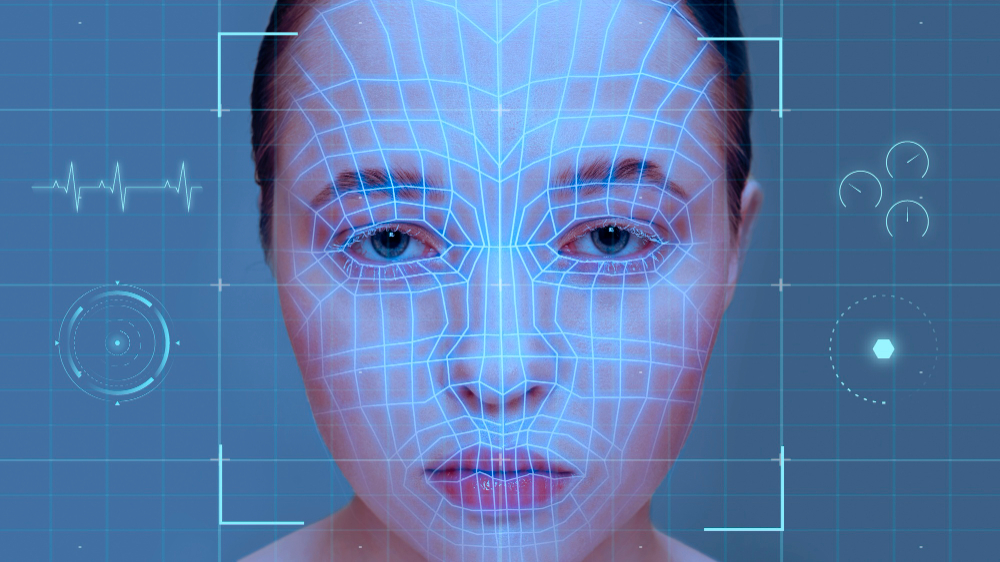

The DeepFace architecture was based on a deep convolutional neural network (CNN) with a structure designed specifically for face recognition. It had multiple layers, each learning increasingly abstract facial features.

Lower layers learned edges and textures, while higher layers learned features such as the shape of the nose, the position of the eyes, and the overall structure of the face.
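One way to see why higher layers respond to larger facial structures is receptive-field arithmetic: each unit in a deeper layer depends on a larger patch of the input image. The minimal sketch below computes that patch width for a stack of layers given as (kernel size, stride) pairs. The layer configuration in the example is hypothetical and is not DeepFace's actual architecture.

```python
def receptive_field(layers):
    """Width, in input pixels, of the region seen by one unit of the last layer.

    `layers` lists (kernel_size, stride) pairs for each convolution or
    pooling layer, ordered from the input toward the output.
    """
    rf = 1    # a unit of the raw image sees a single pixel
    jump = 1  # input-pixel distance between neighboring units in the current layer
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf

# Hypothetical stack: conv 3x3, conv 3x3, 2x2 pooling with stride 2, conv 3x3.
stack = [(3, 1), (3, 1), (2, 2), (3, 1)]
print([receptive_field(stack[:n]) for n in range(1, len(stack) + 1)])  # [3, 5, 6, 10]
```

Each pooling or strided layer multiplies the step between neighboring units, so later layers widen their view faster; stacking enough layers lets a single unit cover an eye, a nose, or eventually most of the face.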

The system used a 3D face model to align faces before processing, which allowed it to handle variations in facial pose.

This was crucial in allowing the system to successfully recognize faces in real-world scenarios, where people often appeared in different orientations.

Innovations Developed: Deep Learning and CNNs

DeepFace's use of CNNs set it apart from conventional facial recognition systems.

CNNs automatically extract features from large datasets without any explicit feature engineering. This gave DeepFace a strong ability to learn and identify faces, placing it among the most effective facial recognition systems of its time.

Another significant innovation in DeepFace was the use of 3D facial models for alignment. This allowed the system to cope better with variations in pose, expression, and angle, so that faces could be recognized even when they were not facing the camera directly.

Techniques for Landmark Detection and Facial Alignment

DeepFace used landmark detection to locate facial points on the eyes, nose, and mouth. By aligning the face to these points, DeepFace could normalize it into a standard form before recognition.

This was essential when faces were captured in different orientations or lighting conditions, because mapping the detected landmarks onto a common reference let the system compare faces consistently.
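DeepFace's own alignment relied on a 3D face model, as described above. As a simpler stand-in, the minimal sketch below shows 2D landmark-based alignment: it fits the rotation, uniform scale, and translation that best map detected landmarks onto a canonical template in the least-squares sense. The template coordinates and function names are hypothetical; treating points as complex numbers is just a compact way to express a 2D similarity transform.

```python
def fit_similarity(src, dst):
    """Least-squares 2D similarity transform mapping `src` points onto `dst`.

    Points are (x, y) tuples. Writing each point as a complex number z,
    the transform is z -> a*z + b: abs(a) is the scale, the angle of a is
    the rotation, and b is the translation.
    """
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    zm = sum(zs) / len(zs)  # centroid of the source points
    wm = sum(ws) / len(ws)  # centroid of the destination points
    num = sum((z - zm).conjugate() * (w - wm) for z, w in zip(zs, ws))
    den = sum(abs(z - zm) ** 2 for z in zs)
    a = num / den
    b = wm - a * zm
    return a, b

def apply_similarity(a, b, points):
    """Apply z -> a*z + b to a list of (x, y) points."""
    mapped = (a * complex(x, y) + b for x, y in points)
    return [(w.real, w.imag) for w in mapped]

# Hypothetical canonical positions: left eye, right eye, mouth center.
TEMPLATE = [(30.0, 40.0), (70.0, 40.0), (50.0, 80.0)]
# Landmarks found on a face that is rotated, enlarged, and shifted in the photo.
detected = [(-70.0, 65.0), (-70.0, 145.0), (-150.0, 105.0)]

a, b = fit_similarity(detected, TEMPLATE)
aligned = apply_similarity(a, b, detected)  # matches TEMPLATE up to rounding
```

Once `a` and `b` are known, the same transform can be applied to every pixel coordinate to warp the face into the canonical frame. A 2D similarity transform cannot undo out-of-plane head rotation, which is why a 3D model helps with faces that are not facing the camera.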

How DeepFace Processes and Recognizes Faces

DeepFace first detects a face in an image and then aligns it using landmark detection and 3D modeling. The aligned face is fed into the deep learning network, which extracts high-level features for comparison against a database of known faces.

The system then outputs the closest match, either identifying the person or verifying that two images show the same person.
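The detect, align, and extract flow described above can be sketched as a composition of stages followed by a similarity comparison. In this minimal sketch, `detect`, `align`, and `embed` are hypothetical placeholders passed in as functions, cosine similarity is an assumed comparison measure, and the 0.8 threshold is arbitrary; the example shows verification (are these the same person?) rather than a search over a whole database.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))

def verify(image_a, image_b, detect, align, embed, threshold=0.8):
    """Return (same_person, score) for two images.

    `detect`, `align`, and `embed` are hypothetical stage functions that
    find the face, normalize its pose, and map it to a feature vector.
    """
    feat_a = embed(align(detect(image_a)))
    feat_b = embed(align(detect(image_b)))
    score = cosine_similarity(feat_a, feat_b)
    return score >= threshold, score

# Toy stages: each "image" already holds a face vector, so detection just
# extracts it and alignment and embedding pass it through unchanged.
def detect(image):
    return image["face"]

def align(face):
    return face

def embed(face):
    return face

photo_1 = {"face": [1.0, 0.0]}
photo_2 = {"face": [0.9, 0.1]}
photo_3 = {"face": [0.0, 1.0]}
print(verify(photo_1, photo_2, detect, align, embed)[0])  # True
print(verify(photo_1, photo_3, detect, align, embed)[0])  # False
```

In practice the threshold is tuned on held-out data to balance false accepts against false rejects.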

Performance and Accuracy

DeepFace's Performance Compared to Previous Facial Recognition Technologies

One of the key strengths of DeepFace was accuracy.

Before DeepFace, facial recognition systems had typically achieved about 80-90% accuracy. DeepFace set a new benchmark of 97.25% accuracy, a substantial leap over its predecessors.

This result is especially impressive given the considerable variation in angle, lighting, and facial expression the system had to handle.

Key Metrics (Accuracy Rates, Error Rates) Achieved by DeepFace

DeepFace approached human-level accuracy in face recognition.

In its evaluation, the system achieved a recognition accuracy of 97.25% on the Labeled Faces in the Wild (LFW) dataset, a standard benchmark in the facial recognition community.
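Benchmarks such as LFW are commonly reported as verification accuracy: the system scores pairs of images, and each pair is labeled as showing either the same person or different people. The minimal sketch below computes accuracy and the corresponding error rate at a fixed threshold. The function name is illustrative, and the scores and labels are made-up toy values, not DeepFace results.

```python
def verification_accuracy(scores, labels, threshold):
    """Fraction of image pairs classified correctly at `threshold`.

    scores: similarity score for each pair.
    labels: True if the pair really shows the same person, else False.
    """
    correct = sum((score >= threshold) == same for score, same in zip(scores, labels))
    return correct / len(scores)

# Toy values for five pairs (not real benchmark data).
scores = [0.95, 0.91, 0.40, 0.85, 0.30]
labels = [True, True, False, False, False]
acc = verification_accuracy(scores, labels, threshold=0.8)
print(acc)      # 0.8
print(1 - acc)  # error rate, about 0.2
```

The error rate is simply one minus the accuracy, so an accuracy of 97.25% corresponds to an error rate of 2.75%.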

This was a major leap beyond earlier systems, which often struggled with the complexity of real-world conditions.

Examples of Large Datasets Used for Training DeepFace

DeepFace was trained on one of the largest face datasets available at the time: millions of labeled face images drawn from Facebook's vast user base. The dataset's size and its variety of lighting, facial expressions, and poses enabled the system to learn to recognize faces under different conditions.

Applications of DeepFace

Social Media Apps (photo tagging on Facebook)

DeepFace was primarily deployed on Facebook, where it analyzed faces in user-uploaded images so that photos could be automatically labeled with the names of the people in them.

Automatic photo tagging became one of Facebook's most-used features and significantly changed how users interacted with photos on the platform.

Effects on Security and Surveillance Systems

DeepFace also had an impact on security and surveillance.

The accuracy DeepFace offered is the kind of capability long prized by law enforcement agencies and security forces for identifying a person in a crowd or tracking individuals through surveillance footage.

Miscellaneous Applications (e.g., Retail, Law Enforcement)

Beyond social media, DeepFace was also considered by retailers, which use face recognition to identify customers and serve them according to their needs, and by law enforcement agencies, which have used the technology to track criminals or locate missing persons.

Ethical Considerations and Privacy Concerns

Discussion of the Ethical Implications of Facial Recognition Technology

As with any advanced technology, facial recognition raises several ethical issues.

With the widespread use of facial recognition systems such as DeepFace, some of the most significant issues involve surveillance and the invasion of private life.

There is also widespread concern that private individuals could be monitored without their consent, in private or commercial contexts, resulting in a harmful loss of privacy.

Surveillance, Privacy, and Data Security Concerns

The controversy over the use of facial recognition systems in public spaces centers on surveillance.

A program like DeepFace could help establish mass surveillance networks that track people's movements without their knowledge. Data security is a further issue: as facial recognition applications collect more personal facial data, the risk of data breaches and misuse grows.

Many countries have implemented laws to regulate the use of facial recognition technology in response to these fears.

Some cities, such as San Francisco, have banned its use by city agencies, including the police. At the same time, there is ongoing debate about how to balance security and privacy.

Limitations in Face Identification (Bias and Fairness Issues)

One of the biggest challenges facing DeepFace and other systems trained on large facial datasets is bias.

Many studies have found that facial recognition systems can perform worse at identifying people with darker skin tones, women, and members of other underrepresented groups.

These findings have raised serious concerns about the fairness of such systems.
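Bias of this kind is usually measured by breaking accuracy down by demographic group instead of reporting a single overall number. The minimal sketch below does that for a list of (group, correct) records; the function name, group labels, and counts are hypothetical toy data.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Per-group accuracy from (group, correct) records, where `correct` is a bool."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, correct in records:
        totals[group] += 1
        hits[group] += int(correct)
    return {group: hits[group] / totals[group] for group in totals}

# Hypothetical evaluation results for two demographic groups.
records = ([("group_a", True)] * 9 + [("group_a", False)]
           + [("group_b", True)] * 7 + [("group_b", False)] * 3)
per_group = accuracy_by_group(records)
print(per_group)  # {'group_a': 0.9, 'group_b': 0.7}
gap = max(per_group.values()) - min(per_group.values())
```

A large gap between the best- and worst-served groups signals a fairness problem even when overall accuracy looks high.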

Though DeepFace was impressively accurate, it still faced challenges in real-time recognition in complex environments.

Poor lighting, occlusions such as glasses or hats, and extreme facial expressions can still confound the system.

Continued Need for Enhancing Accuracy and Reliability

Like any AI technology, facial recognition needs continual updating to remain accurate and reliable.

Many new methods for addressing bias, real-time processing, and adaptability to varying environments are still in development.

The Future of Facial Recognition Technology

Predictions for Advancements in Facial Recognition Post-DeepFace

The future of face detection and recognition technology looks promising. Advances in deep learning and AI promise even greater performance improvements.

Progress did not stop with the publication of DeepFace. Face recognition models and systems will likely become even more accurate, reliable, and adaptable to real-world conditions.

The Application of AI and Machine Learning in the Advancement of Recognition Systems

AI and machine learning will be integral to further developing the capabilities of face recognition systems.

Larger datasets, better algorithms, and solutions to problems such as bias could make the next generation of systems more inclusive and accurate.

Future Trends and Innovations to Consider in the Industry

Future breakthroughs in face recognition will likely come from better handling of environmental factors, such as lighting and angle, and from improved real-time processing.

As concerns over data security grow, future systems may also adopt privacy-aware approaches such as lightweight, on-device face detection and recognition using edge computing.

In Summary

DeepFace was a monumental step forward for face recognition in terms of accuracy and performance, and it established a new standard for the entire industry.

By applying deep learning techniques and convolutional neural networks, DeepFace transformed not only facial recognition on social media but also many other sectors.

Facial recognition development should continue but must navigate the ethical, privacy, and fairness issues involved.

As the field matures, AI and machine learning will further refine facial recognition technology and extend its applications, making it an increasingly integral part of our technological landscape.