Introduction to Large Language Models

Large language models (LLMs) are an essential component of modern artificial intelligence, especially in natural language processing (NLP).

Because they are built to comprehend, interpret, and generate human language, large language models have proven to be powerful tools for a wide variety of tasks, ranging from text generation and language translation to code generation.

Large language models are built on the transformer architecture and learn to perform language tasks by processing massive volumes of text.

Their development has produced AI systems that can generate human-like text, answer questions, and assist with a wide range of technical and non-technical work.

Maintaining large language models effectively is crucial so that they keep operating efficiently and can be improved over time.

The success of the most popular LLMs has shown why these models matter so much today.

Because of their size, these models are often called large-scale models, a term that emphasizes both their number of parameters and the amount of data they process.

What Is a Large Language Model (LLM)?

A large language model is an AI model designed for tasks involving human language.

Unlike many traditional machine learning models, which are trained for a single narrow task, LLMs are built with deep learning techniques and pre-trained on large datasets, so they learn general patterns of natural language.

These models use the transformer architecture to process input text, a framework that popularized the use of self-attention.

Self-attention lets the model weigh how relevant different words and phrases in the input are to one another, which helps it produce contextually appropriate responses.

Large language models have become important because they can carry out many diverse tasks without being programmed separately for each one.

For instance, they can write code, translate between languages, and generate content from natural-language instructions.

Fine-tuning is important because it lets these models specialize in particular tasks or domains, such as generating medical texts or processing legal documents.

This adaptability is what separates them from traditional machine learning models, which are typically built for one task.

How Do Large Language Models Work?

Large language models are built with deep learning algorithms.

Most modern LLMs are based on the transformer, an architecture first described in 2017.

A transformer processes text through stacked layers that combine an attention mechanism with fully connected (feed-forward) layers.

Self-attention is the transformer's key innovation: it lets the model estimate how relevant each word in a sentence or paragraph is to every other word.

This helps the model keep track of context and word dependencies, so the text it generates is generally coherent and relevant to the input.
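
To make self-attention concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: each word is already represented by a small hand-picked vector (the numbers are illustrative, not taken from any real model), and the queries, keys, and values are the vectors themselves rather than learned projections.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention over a list of equal-length vectors.

    Simplification (assumption): queries, keys, and values are the input
    vectors themselves; a real transformer first multiplies them by learned matrices.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Relevance score of every word (key) to the current word (query).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for a three-word sentence.
sentence = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.8]]
outputs, weights = self_attention(vectors)
for word, row in zip(sentence, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how strongly one word attends to every word in the sentence; the weights in a row always sum to 1.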

Large language models are typically trained on enormous datasets containing millions or even billions of text samples.

During training, the model gradually picks up the syntax, meaning, and patterns of the language.

Specializing Large Language Models for Specific Tasks

Once a model has been pre-trained on general data, it can be fine-tuned for specific tasks: further training on domain-specific datasets refines its behavior for that domain.

Today, large language models are widely used for sentiment analysis, question answering, and language generation.

One of the biggest challenges in maintaining large language models is their computational cost: training and running them requires huge amounts of computing power, distributed software systems, and significant technical expertise.

Despite these demands, LLMs hold a prime position in the AI landscape because of their usefulness across a wide range of industries.

Language Model and Its Role in AI

A language model is a type of machine learning model that assigns probabilities to sequences of words, for example the probability of the next word given the words that came before it.

Put simply, it is designed to understand and generate human language. Language models underpin many NLP tasks, including text generation, machine translation, and summarization.
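
In the standard textbook formulation (not specific to any one model), a language model assigns a probability to a word sequence $w_1, \dots, w_n$ by factoring it with the chain rule into a product of next-word predictions:

$$
P(w_1, w_2, \dots, w_n) = \prod_{t=1}^{n} P(w_t \mid w_1, \dots, w_{t-1})
$$

Each factor is the probability of one word given all the words before it, which is the quantity a model uses when it generates text word by word.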

Modern language models have transformed the field and now represent the state of the art for working with text data.

They include a class of models called generative pre-trained transformers (GPT), which are pre-trained on unlabeled data to generate human-like text.
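
Generative models of this kind produce text autoregressively: they predict one word, append it to the input, and repeat. The loop below sketches greedy decoding under a loud assumption: the hypothetical `toy_next_word_probs` table stands in for a trained transformer, and, unlike a real model, it only looks at the last word of the context.

```python
def toy_next_word_probs(context):
    """Hypothetical stand-in for a trained model.

    Returns a probability distribution over the next word. For brevity it only
    looks at the last word; a real transformer attends to the entire context.
    """
    table = {
        "<start>": {"language": 0.6, "models": 0.4},
        "language": {"models": 0.9, "<end>": 0.1},
        "models": {"generate": 0.7, "<end>": 0.3},
        "generate": {"text": 0.8, "<end>": 0.2},
        "text": {"<end>": 1.0},
    }
    return table.get(context[-1], {"<end>": 1.0})

def greedy_generate(max_new_words=10):
    """Autoregressive greedy decoding: repeatedly append the most likely next word."""
    context = ["<start>"]
    for _ in range(max_new_words):
        probs = toy_next_word_probs(context)
        next_word = max(probs, key=probs.get)  # pick the highest-probability word
        if next_word == "<end>":
            break
        context.append(next_word)  # the output becomes part of the next input
    return " ".join(context[1:])

print(greedy_generate())
```

Greedy decoding always takes the single most likely word; many systems instead sample from the distribution (for example with a temperature setting) so the output is less repetitive.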

These models are powerful and flexible because they are pre-trained on large datasets and then fine-tuned for the task at hand.

In this sense they are foundation models: general-purpose models on which many downstream applications are built.

Their broad applications include sentiment analysis, virtual assistants, and any system that needs to generate or understand natural language.

Because language models scale well and respond in context, they have become a cornerstone of modern AI systems.

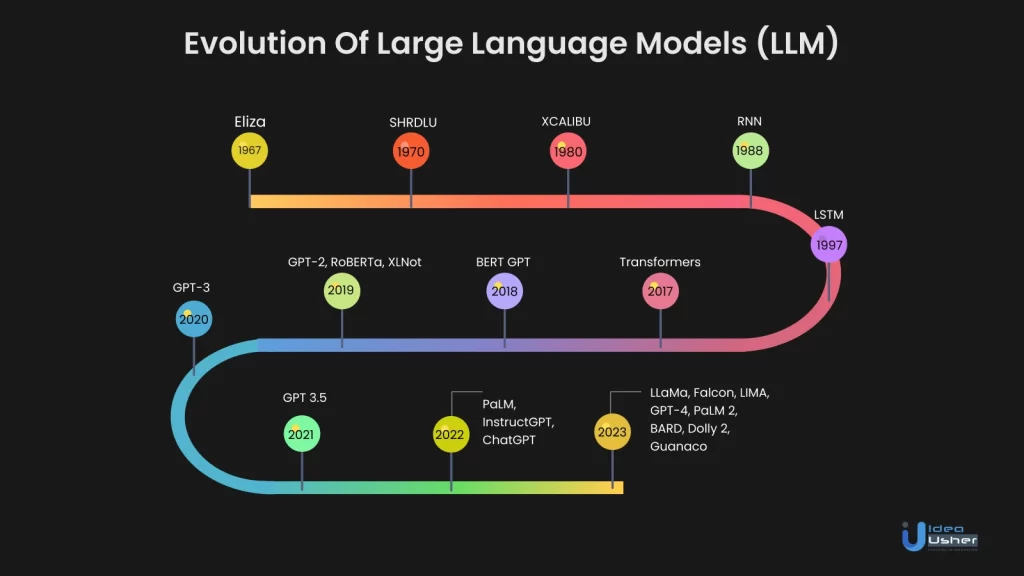

Evolution of Language Models

Language modeling started with simple statistical models such as n-grams and has grown into complex deep learning models such as transformers.

Traditional models used statistical techniques to predict word sequences from only the few preceding words, so they could not capture long-range dependencies in text.
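
As an illustration of the statistical approach, here is a minimal bigram (n = 2) model estimated by counting word pairs in a tiny made-up corpus. Because it conditions only on the single previous word, it cannot use anything said earlier in the sentence, which is the long-range limitation of these models.

```python
from collections import Counter, defaultdict

# Tiny illustrative corpus (made up for this example).
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
]

# Count how often each word follows each previous word; "<s>" marks a sentence start.
bigram_counts = defaultdict(Counter)
for sentence in corpus:
    words = ["<s>"] + sentence.split()
    for prev, curr in zip(words, words[1:]):
        bigram_counts[prev][curr] += 1

def next_word_prob(prev, word):
    """Maximum-likelihood estimate of P(word | prev) from bigram counts."""
    total = sum(bigram_counts[prev].values())
    return bigram_counts[prev][word] / total if total else 0.0

def sentence_prob(sentence):
    """Approximate P(sentence) as a product of bigram probabilities."""
    words = ["<s>"] + sentence.split()
    p = 1.0
    for prev, curr in zip(words, words[1:]):
        p *= next_word_prob(prev, curr)
    return p

print(next_word_prob("the", "cat"))
print(sentence_prob("the cat sat on the rug"))
```

The model gives a nonzero probability to "the cat sat on the rug" even though that exact sentence never appears in the corpus, because every adjacent word pair does.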

Deep learning techniques, and transformer models in particular, then moved language modeling a significant step forward.

Transformer models work by attending to different parts of the input text all at once, thanks to a mechanism called self-attention.

This is particularly useful for tasks like text generation and machine translation, where the model needs to keep track of context even over long sentences or paragraphs.

Another transformer-based model, Bidirectional Encoder Representations from Transformers (BERT), substantially improved language model performance on tasks such as question answering and sentiment analysis.

Training Large Language Models (LLMs)

Training a large language model is an elaborate process that requires substantial computational resources.

It involves feeding the model enormous datasets of diverse text, such as technical documents, books, articles, and web content.

In general, the more training data a model sees, the better it becomes at understanding and generating natural language.

This is one reason why very large models, with billions of parameters trained on vast text corpora, have achieved top performance in NLP.

Through training, LLMs learn the relationships and patterns between words that enable them to generate coherent, contextually appropriate text.
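
During pre-training, learning these patterns is usually framed as minimizing next-token cross-entropy: the average negative log-probability the model assigns to each word that actually comes next. The sketch below computes that loss for hypothetical model predictions (the probabilities are invented for illustration); training adjusts the model's parameters to push this number down.

```python
import math

def next_token_cross_entropy(predicted_dists, actual_next_words):
    """Average negative log-likelihood of the true next words.

    predicted_dists: list of dicts mapping candidate word -> probability,
                     one dict per position (assumed to come from a model).
    actual_next_words: the words that actually came next in the training text.
    """
    total = 0.0
    for dist, word in zip(predicted_dists, actual_next_words):
        p = dist.get(word, 1e-12)  # tiny floor avoids log(0) for unseen words
        total += -math.log(p)
    return total / len(actual_next_words)

# Hypothetical predictions for the next words in the text "cats chase mice".
targets = ["chase", "mice"]
confident = [{"chase": 0.9, "eat": 0.1}, {"mice": 0.8, "dogs": 0.2}]
unsure = [{"chase": 0.3, "eat": 0.7}, {"mice": 0.4, "dogs": 0.6}]

print(round(next_token_cross_entropy(confident, targets), 3))
print(round(next_token_cross_entropy(unsure, targets), 3))
```

A lower loss means the model placed more probability on the text that actually occurred, so the "confident" predictions score better than the "unsure" ones.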

Fine-tuning makes a model even better at particular tasks. For instance, models can be optimized for writing software code or translating languages.

Fine-tuning is therefore often necessary to achieve good performance on domain-specific tasks and to ensure that the generated output meets the required quality standards.
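
Fine-tuning a real LLM requires a deep learning framework, but the core idea, continuing gradient-based training from already-learned parameters on a smaller domain-specific dataset, can be sketched with a toy one-feature logistic regression. Everything here is a hypothetical stand-in: the "pre-trained" weights, the domain data, and the learning rate are invented, and a real LLM has billions of parameters rather than two.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(w, b, data):
    """Average binary cross-entropy of the toy model on (x, label) pairs."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)
        total += -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
    return total / len(data)

def fine_tune(w, b, data, lr=0.1, epochs=200):
    """Continue gradient descent from existing parameters (w, b) on new data."""
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y  # derivative of log loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return w, b

# Hypothetical parameters "pre-trained" on general data.
pretrained_w, pretrained_b = 1.0, 0.0

# Hypothetical domain data whose decision boundary sits near x = 2 instead of 0.
domain_data = [(0.0, 0), (1.0, 0), (1.5, 0), (2.5, 1), (3.0, 1), (4.0, 1)]

before = log_loss(pretrained_w, pretrained_b, domain_data)
w, b = fine_tune(pretrained_w, pretrained_b, domain_data)
after = log_loss(w, b, domain_data)
print(f"domain loss before: {before:.3f}, after: {after:.3f}")
```

The key design choice is that training starts from the existing parameters rather than from scratch, so the model keeps what it already learned and only adjusts to the new domain.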

Another approach is retrieval-augmented generation (RAG), in which the model retrieves potentially relevant information from external sources during the generation process itself.

Grounding the output in retrieved information helps make the generated content more accurate and relevant, which strengthens large language models on question answering and summarization tasks.
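
Below is a minimal sketch of the retrieval step in retrieval-augmented generation. It makes simplifying assumptions: documents are scored by plain word overlap with the query (real systems typically use embedding similarity or a search index), the document collection is made up, and `call_llm` is a hypothetical placeholder for whatever model API is used, so the sketch stops at building the augmented prompt.

```python
import re

def tokenize(text):
    """Lowercase text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Return the k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Prepend retrieved context to the question before it is sent to the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"

# Made-up knowledge base for illustration.
docs = [
    "The transformer architecture was introduced in 2017.",
    "BERT stands for Bidirectional Encoder Representations from Transformers.",
    "Fine-tuning adapts a pre-trained model to a specific domain.",
]

prompt = build_prompt("What does BERT stand for?", docs, k=1)
print(prompt)
# answer = call_llm(prompt)  # hypothetical call to a language model API
```

Because the retrieved passage is placed in the prompt, the model can answer from that text instead of relying only on what it memorized during training.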

Applications of Large Language Models

Large language models have been used across many industries for various purposes. Some of the best-known uses are:

1. Text Generation:

Because LLMs can generate human-like text, they are useful for producing content, reports, and even poetry. They also help developers by generating software code.

2. Language Translation:

Large language models can also understand and translate between languages. They are used in programs such as Google Translate and other translation software to produce accurate, contextually appropriate translations.

3. Virtual Assistants:

LLMs help virtual assistants such as Siri and Alexa understand users' queries and respond to them. These assistants depend on the model's ability to generate responses relevant to the context.

4. Question Answering:

LLMs are well suited to systems that answer questions based on textual information. Their ability to extract answers from large collections of text makes them valuable in support and customer service roles.

5. Sentiment Analysis:

Businesses use LLMs to gauge overall sentiment by scanning social media posts, customer reviews, and other text data. They then use the resulting insights to decide how to improve customer satisfaction.

6. Software Writing:

Large language models can now write code in many different programming languages. They speed up development by suggesting code and auto-completing functions.

7. Semantic Search:

In semantic search, LLMs are highly effective because they can grasp the meaning behind a query rather than just matching keywords.

By examining the connections between words and sentences, they facilitate contextual understanding and produce more precise search results.
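
Semantic search is commonly implemented by mapping queries and documents to embedding vectors and ranking documents by vector similarity. The sketch below assumes such embeddings already exist; the 3-dimensional vectors are hand-made for illustration, whereas real embeddings come from a trained model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical document embeddings; in practice a model produces these.
doc_embeddings = {
    "How to reset a forgotten password": [0.9, 0.1, 0.0],
    "Shipping times for international orders": [0.0, 0.2, 0.9],
    "Recovering access to your account": [0.8, 0.3, 0.1],
}

def semantic_search(query_embedding, docs, top_k=2):
    """Rank documents by cosine similarity to the query embedding."""
    scored = [(cosine_similarity(query_embedding, emb), title) for title, emb in docs.items()]
    scored.sort(reverse=True)
    return [title for _, title in scored[:top_k]]

# Hypothetical embedding of the query "I can't log in".
query = [0.85, 0.25, 0.05]
print(semantic_search(query, doc_embeddings))
```

Neither top result shares a keyword with "I can't log in"; they rank highly because their vectors point in a similar direction, which is the point of searching by meaning.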

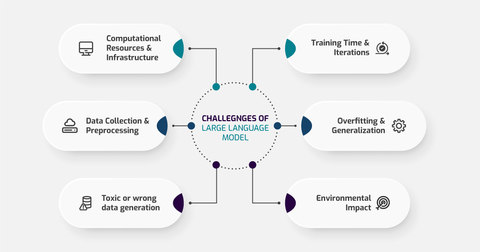

Challenges in Maintaining Large Language Models

While large language models have many advantages, they also bring challenges, especially the expertise and computing power needed to keep them running.

Training models on large datasets requires large amounts of computational power, distributed software systems, and high-performance hardware.
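
A back-of-the-envelope calculation shows why the hardware demands are so high. The sketch below counts only the memory needed to store a model's weights, assuming a hypothetical model with 7 billion parameters; training needs several times more memory on top of this for gradients, optimizer state, and intermediate activations.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to hold the weights, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 10**9

# Hypothetical model size and common numeric precisions, for illustration only.
params = 7_000_000_000
for name, nbytes in [("32-bit float", 4), ("16-bit float", 2), ("8-bit integer", 1)]:
    print(f"{name}: {weight_memory_gb(params, nbytes):.0f} GB")
```

Even the 16-bit figure exceeds the memory of many consumer GPUs, which is one reason large models are trained and served on distributed systems.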

These models also consume a great deal of energy, raising concerns about the environmental impact of training such large models.

Another challenge is ensuring the generated text is free from biases in the data used to train the model.

Because LLMs are trained on large-scale datasets, they can inadvertently pick up and propagate social biases, which may have serious consequences.

To address this, the training data must be selected carefully, and the model's behavior must be monitored continuously after deployment.

Conclusion

Large language models have revolutionized the field of artificial intelligence by providing powerful tools for understanding and generating human language.

Built on the transformer architecture and deep learning techniques, these models can carry out tasks ranging from sentiment analysis to code generation and language translation.

Despite the challenges associated with their training and maintenance, LLMs have steadily pushed the envelope of what was hitherto thought possible by artificial intelligence in NLP.

As research advances and techniques such as retrieval-augmented generation and fine-tuning continue to mature, large language models will find an increasingly wide range of applications, underlining their importance in both AI research and practice.

Frequently Asked Questions (FAQs)

1. What is a large language model, and how does it work?

A large language model is an AI model designed to understand and generate human language. It uses deep learning techniques, particularly the transformer architecture, to process large amounts of textual data. These models work by training on massive data sets to learn patterns in natural language, which allows them to perform tasks like text generation, language translation, and question answering.

2. What is the difference between a language model and a large language model?

A language model predicts word sequences based on previous inputs, while a large language model is a more advanced version that leverages transformer models and deep learning algorithms to handle complex tasks like code generation, sentiment analysis, and producing contextually relevant responses in multiple languages.

3. How are large language models trained, and what is fine-tuning?

The training process for large language models involves exposing them to vast amounts of training data to learn linguistic patterns. Fine-tuning refers to the process of further training these pre-trained models on domain-specific tasks to improve their performance for particular applications like writing software code, language generation, or sentiment analysis.

4. What are the common applications of large language models in natural language processing?

Common applications of large language models include text generation, language translation, virtual assistants, question answering, sentiment analysis, and code generation. These models also support in-context learning for more accurate responses in diverse tasks.

5. What challenges exist in training and maintaining large language models?

Training large language models requires extensive computational resources, deep learning expertise, and access to distributed software systems. Addressing bias in training data and the environmental impact of training very large models also remains a significant challenge. Maintaining these models further involves ensuring their accuracy and scalability and making sure they handle specific language-related tasks well, all of which requires technical expertise.
