Deep learning has transformed computer vision and opened avenues for research into image classification, object detection, and related tasks.

The ResNet family of models is amongst the most important contributors to this revolution, and ResNet50 itself is one of the most widely implemented.

In this article, we’ll take an in-depth look at ResNet50, covering its architecture, characteristics, applications, and significance in deep learning.

1. Introduction to ResNet

What is ResNet (Residual Network)?

ResNet, an acronym for Residual Network, is a deep learning architecture developed to tackle the problems in training very deep neural networks.

It was first proposed by researchers from Microsoft Research, who introduced the idea of residual learning, which allows models to keep learning effectively as depth increases.

https://images.app.goo.gl/exhtJNgffsfQF5Ws5

Origin and Development of ResNet

ResNet was first presented in the paper Deep Residual Learning for Image Recognition, which was released in 2015 and presented at the 2016 Conference on Computer Vision and Pattern Recognition (CVPR).

The authors, Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun, came up with a new approach to deal with the problem of vanishing gradients, which had hindered the training of deep neural networks.

Role of Deep Neural Networks in Computer Vision

Deep neural networks have greatly increased the accuracy and performance for many computer vision tasks.

However, training very deep neural networks leads to problems such as vanishing gradients and model degradation, where deeper models perform worse than shallower ones.

The ResNet architecture addresses these challenges and allows training networks with hundreds or even thousands of layers.

Problems solved by ResNet

ResNet deals with the vanishing gradient problem in particular by incorporating residual connections.

The residual connections allow the gradients to pass directly through the network, making the training of deep models relatively easier and thus achieving superior performance.

2. What is ResNet50?

ResNet50 Definition

ResNet50 is a particular configuration of the ResNet architecture with 50 layers.

It is a powerful convolutional neural network (CNN) widely used for image recognition, object detection, and feature extraction.

Meaning of 50 in ResNet50

The 50 in ResNet50 denotes the number of weighted layers in the network.

These are the convolutional layers, most of them grouped into residual blocks that use skip connections, plus one fully connected layer. Pooling layers are part of the network but are not included in the count.
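The arithmetic behind the name can be sketched in a few lines. This assumes the standard ResNet50 configuration of 3, 4, 6, and 3 bottleneck blocks per stage, and the usual convention of not counting pooling, batch normalization, or shortcut projection layers; the variable names are illustrative only.

```python
# Counting the weighted layers in ResNet50 (a sketch, assuming the
# standard configuration of 3, 4, 6 and 3 bottleneck blocks per stage).
BLOCKS_PER_STAGE = [3, 4, 6, 3]
CONVS_PER_BOTTLENECK = 3  # 1x1, 3x3, 1x1

stem_conv = 1  # the initial 7x7 convolution
block_convs = sum(BLOCKS_PER_STAGE) * CONVS_PER_BOTTLENECK
final_fc = 1   # the fully connected classifier

total_layers = stem_conv + block_convs + final_fc
print(total_layers)
```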

Applications and Popularity of ResNet50

ResNet50 is extensively used due to its excellent accuracy and flexibility.

The model has been successfully used for a variety of computer vision applications such as medical image analysis, autonomous driving, and facial recognition.

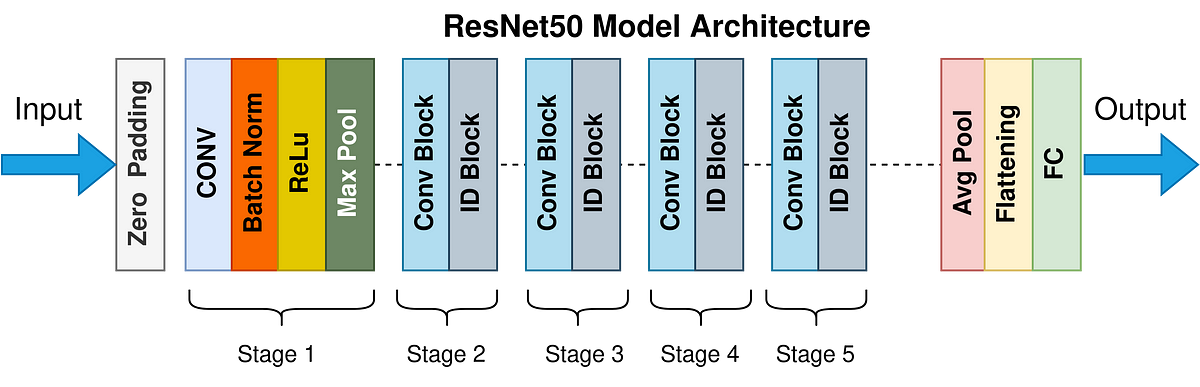

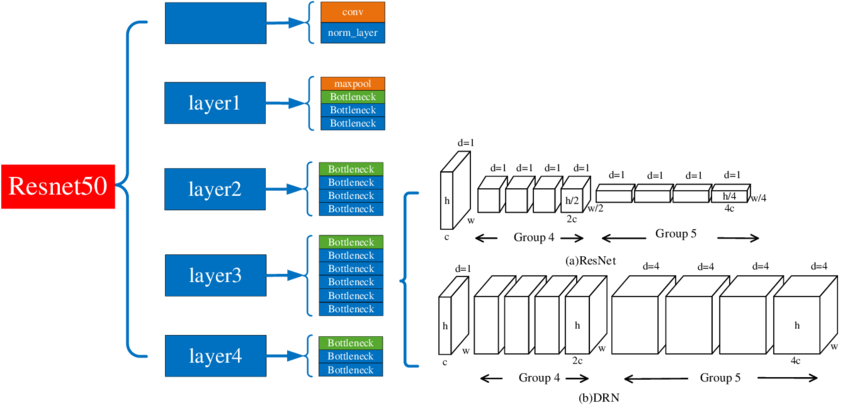

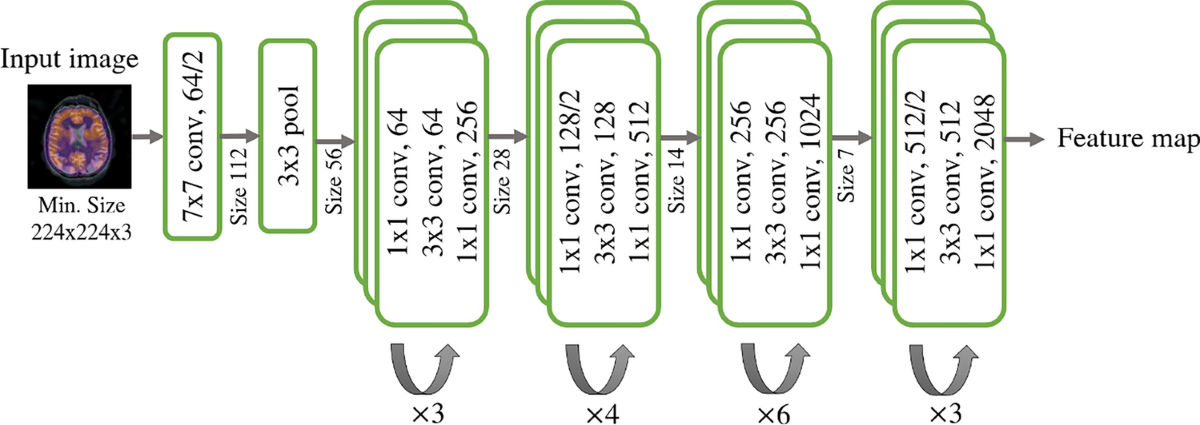

3. ResNet50 Architecture

Overview of ResNet50’s Layer Structure

The ResNet50 architecture is built on residual blocks, whose shortcut connections add the input of each block to its output and make very deep networks trainable. It consists of:

1. Input Layer: Accepts an image as input.

2. Convolutional Layers: Extract features from the input image.

3. Bottleneck Design: Each residual block contains three layers:

- a 1x1 convolution,

- a 3x3 convolution,

- a 1x1 convolution.

4. Identity and Residual Connections: Enable gradients to flow through the network.

5. Pooling Layers: Reduce the spatial dimensions of the feature maps.

6. Fully Connected Layer: Performs the final classification.

7. Output Layer: Outputs the class probabilities.

Number of Layers and Parameter Distribution

ResNet50 has roughly 25.6 million parameters distributed across its convolutional and fully connected layers; these parameters are updated throughout training to minimize a loss function.
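To see how the parameters are distributed, the count can be reproduced by hand. The sketch below assumes the standard ImageNet configuration: 1000 output classes, bias-free convolutions, batch normalization with a learnable scale and shift after every convolution, and a 1x1 projection shortcut in the first block of each stage. The helper names are hypothetical and not taken from any library.

```python
def conv_params(kernel, c_in, c_out):
    """Weights of a kernel x kernel convolution without bias."""
    return kernel * kernel * c_in * c_out

def bn_params(channels):
    """Learnable scale and shift of a batch normalization layer."""
    return 2 * channels

def bottleneck_params(c_in, width, projection):
    """Parameters of one 1x1 -> 3x3 -> 1x1 bottleneck block."""
    c_out = 4 * width
    total = conv_params(1, c_in, width) + bn_params(width)
    total += conv_params(3, width, width) + bn_params(width)
    total += conv_params(1, width, c_out) + bn_params(c_out)
    if projection:  # 1x1 convolution on the shortcut to match shapes
        total += conv_params(1, c_in, c_out) + bn_params(c_out)
    return total

def resnet50_params(num_classes=1000):
    counts = {"stem": conv_params(7, 3, 64) + bn_params(64)}
    c_in = 64
    stages = [(64, 3), (128, 4), (256, 6), (512, 3)]  # (width, blocks)
    for index, (width, blocks) in enumerate(stages, start=2):
        stage_total = 0
        for block in range(blocks):
            stage_total += bottleneck_params(c_in, width, projection=(block == 0))
            c_in = 4 * width
        counts[f"conv{index}_x"] = stage_total
    counts["fc"] = c_in * num_classes + num_classes  # weights plus biases
    return counts

counts = resnet50_params()
total_params = sum(counts.values())
for name, n in counts.items():
    print(f"{name:8s} {n:>12,d}  ({100 * n / total_params:.1f}%)")
print(f"total    {total_params:>12,d}")
```

Under these assumptions, most of the parameters sit in the last residual stage, where the channel counts are largest, while the stem contributes only a tiny fraction.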

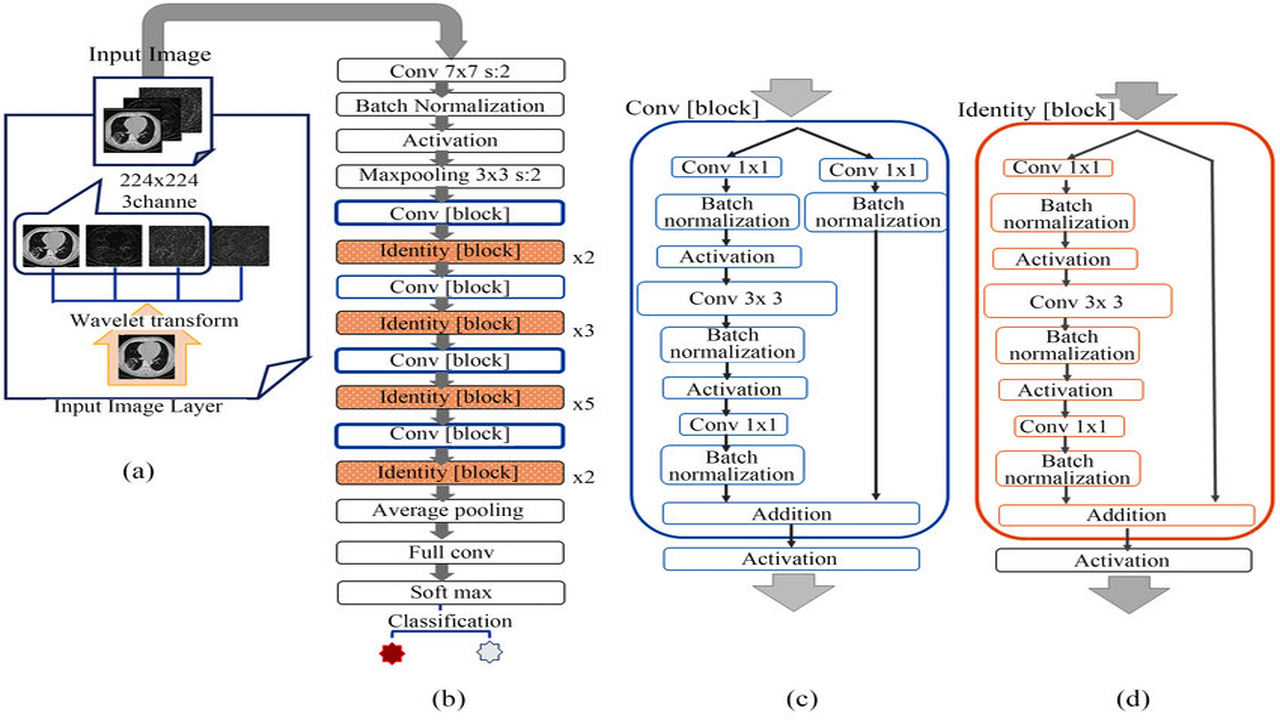

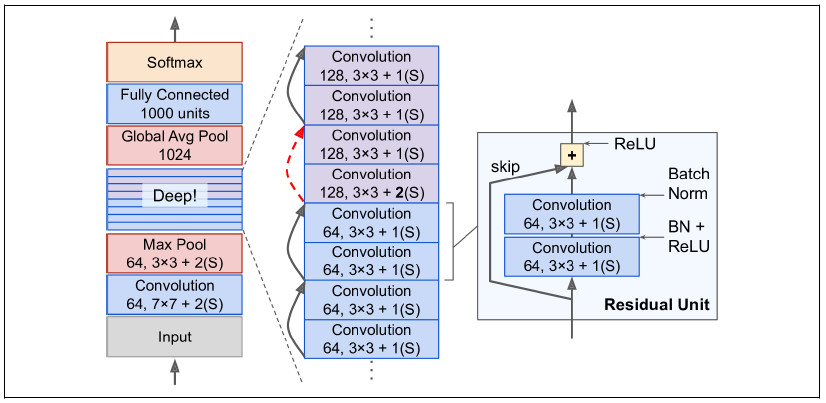

Skip Connections and Residual Blocks

Residual blocks wrap a few convolutional layers with an additive shortcut, or skip connection.

That is, the input to each block is added to the output of that block, providing a shortcut around the layers that helps prevent vanishing gradients.
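The addition itself is simple, as a minimal sketch on plain Python lists shows. The function names here are hypothetical, the residual branch is a stand-in for the real stack of convolutions, and the ReLU that ResNet applies after the addition is left out to keep the shortcut in focus.

```python
def residual_block(x, residual_fn):
    """Return F(x) + x, where residual_fn computes the residual branch F."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        raise ValueError("the shortcut needs matching input and output shapes")
    return [f + xi for f, xi in zip(fx, x)]

def zero_branch(x):
    """A residual branch that has learned nothing: F(x) = 0."""
    return [0.0 for _ in x]

def small_branch(x):
    """A residual branch that adds a small refinement: F(x) = 0.1 * x."""
    return [0.1 * xi for xi in x]

x = [1.0, -2.0, 3.0]
identity_out = residual_block(x, zero_branch)   # block behaves as identity
refined_out = residual_block(x, small_branch)   # input plus a small correction
print(identity_out, refined_out)
```

When the residual branch outputs zeros, the block simply passes its input through, which is why stacking more blocks does not have to make the network worse.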

4. Key Features of ResNet Models

Introduction to Residual Learning

Residual learning is what ResNet is based on.

The residual blocks learn the mapping F(x) = H(x) – x instead of learning the mapping H(x) directly, so the output of each block is F(x) + x.

This makes it easier for the network to optimize.

Skip Connections and the Vanishing Gradient Problem

Skip connections allow gradients to pass directly through the network, preventing them from becoming too small as they propagate backward.

This innovation allows for the training of very deep networks.
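A toy calculation illustrates the effect. The sketch below is a deliberately simplified assumption: every layer is a scalar function w * x with a small weight, so a plain stack multiplies gradients by w at each layer, while a residual stack multiplies them by 1 + w. Real networks are far more complex, but the contrast in scale carries over.

```python
def plain_gradient(weights):
    """Gradient of a plain stack y = w_n * ... * w_1 * x with respect to x."""
    grad = 1.0
    for w in weights:
        grad *= w  # d(w * x)/dx = w
    return grad

def residual_gradient(weights):
    """Gradient of a residual stack where each layer computes x + w * x."""
    grad = 1.0
    for w in weights:
        grad *= 1.0 + w  # d(x + w * x)/dx = 1 + w
    return grad

weights = [0.01] * 50  # 50 layers with small weights (toy values)
plain = plain_gradient(weights)
residual = residual_gradient(weights)
print(f"plain: {plain:.3e}, residual: {residual:.3f}")
```

The plain gradient collapses toward zero, while the residual gradient stays close to 1 because the identity path always contributes.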

Depth Scalability in ResNet Architectures

ResNet models come in different depths, such as

ResNet18,

ResNet34,

ResNet101,

ResNet152.

This scalability enables users to select a model based on their computational resources and task requirements.

5. The Contribution of ResNet to CNNs

ResNet in CNNs

ResNet extends traditional CNNs with residual connections, which make it possible to train deeper networks without a loss of performance.

ResNet vs. Traditional CNN

Compared to traditional CNN architectures, which suffer from vanishing gradients as depth grows, ResNet achieves better accuracy because it can train much deeper models.

Performance Improvement

ResNet50 has shown high performance in several applications:

- Image Classification: High performance on benchmarks like ImageNet.

- Object Detection: Serves as the backbone for many frameworks, including Faster R-CNN.

- Segmentation: Serves as the backbone for models like Mask R-CNN.

6. What is the Main Focus of ResNet?

Challenges in Training Deep Networks

ResNet aims to eliminate vanishing gradients and overall degradation in performance due to depth in very deep networks.

Better Accuracy with Depth

By enabling deeper models to be trained, ResNet improves both accuracy and generalization on complex datasets.

Practical Training of Deeper Models

ResNet makes it practical to train models with hundreds or even thousands of layers, unlocking new possibilities in network architecture.

7. ResNet50 Layers in Detail

Input Preprocessing and Initial Convolution

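ResNet50 starts by processing the input image, typically resized to 224x224 pixels. After that, there is an initial convolution layer with a kernel size of 7x7 and a stride of 2, followed by max-pooling.

Detailed Explanation of the 4 Main Stages

After Conv1 (the initial convolution and max-pooling), the network has four stages of residual blocks:

1. Conv2_x: First set of residual blocks (3 blocks).

2. Conv3_x: Second set of residual blocks (4 blocks), with smaller feature maps and more channels.

3. Conv4_x: Third set of residual blocks (6 blocks), further reducing the spatial size and increasing the channel count.

4. Conv5_x: Fourth set of residual blocks (3 blocks), followed by global average pooling.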
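How the feature maps change from stage to stage can be traced with the usual convolution output-size formula. The sketch below assumes the standard ResNet50 settings, which are not all spelled out above: a 224x224 input, a stride of 2 and padding of 3 for the 7x7 convolution, a 3x3 max-pool with stride 2, a final Conv5_x stage, and a stride-2 3x3 convolution at the start of every stage after Conv2_x. The function names are illustrative.

```python
def out_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_resnet50(size=224):
    """Return (name, spatial size, channels) after each part of the network."""
    trace = [("input", size, 3)]
    size = out_size(size, kernel=7, stride=2, padding=3)
    trace.append(("conv1", size, 64))
    size = out_size(size, kernel=3, stride=2, padding=1)
    trace.append(("maxpool", size, 64))
    stages = [("conv2_x", 256, 1), ("conv3_x", 512, 2),
              ("conv4_x", 1024, 2), ("conv5_x", 2048, 2)]
    for name, channels, stride in stages:
        # Only the first 3x3 convolution of a stage changes the spatial size.
        size = out_size(size, kernel=3, stride=stride, padding=1)
        trace.append((name, size, channels))
    return trace

trace = trace_resnet50()
for name, size, channels in trace:
    print(f"{name:8s} {size:3d} x {size:<3d} x {channels}")
```

Each later stage halves the spatial resolution while the number of channels grows, so the network trades spatial detail for richer features.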

Skip Connections in Each Stage

Skip connections ensure that the input of each residual block is added to its output, which helps in gradient flow and efficient training.

Batch Normalization and Activation Layers

Each convolutional layer is followed by batch normalization and a ReLU activation function, which improves stability and convergence during training.
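As a rough illustration of these two operations, the sketch below normalizes a small batch of activations for a single channel and then applies ReLU. It is a simplified training-time view that assumes a scale of 1, a shift of 0, and no running statistics; the function names are hypothetical.

```python
import math

def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a batch to zero mean and unit variance, then scale and shift."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]

def relu(values):
    """Keep positive activations and set negative ones to zero."""
    return [max(0.0, v) for v in values]

activations = [2.0, 4.0, 6.0, 8.0]  # toy activations for one channel
normalized = batch_norm(activations)
output = relu(normalized)
print(normalized, output)
```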

8. Advantages and Limitations of ResNet50

Advantages

1. High Accuracy: It performs exceptionally well on benchmarks like ImageNet.

2. Effective Depth Usage: Residual connections make it possible to train deep networks effectively.

3. Transfer Learning: Pre-trained ResNet50 models are widely used for transfer learning in various domains.

Limitations

1. Computational Costs: It requires a lot of computational resources for training and inference.

2. Training Complexities: Deeper ResNet variants are hard to train due to memory and time requirements.

9. Applications of ResNet50

Image Classification

ResNet50 has been applied very widely to image classification tasks and achieves high accuracy on ImageNet.

Object Detection and Segmentation

ResNet50 acts as the backbone of popular object detection and segmentation frameworks such as Faster R-CNN and Mask R-CNN.

Feature Extraction

It can be used as a feature extractor, supplying high-quality features to other network architectures.

Applications

1. Medicine: Image diagnosis.

2. Self-Driving Cars: Recognize objects and scenes.

3. Robotics: Developing visual perception systems.

10. Variants and Extensions of ResNet

Variants of ResNet

1. ResNet18 and ResNet34: Shallower versions for smaller dataset sizes.

2. ResNet101 and ResNet152: Deeper versions with better accuracy.

Extensions and Improvements

1. ResNeXt: Group convolutions are implemented for better performance.

2. SENet: An attention mechanism to improve feature representation.

Comparison with Other Architectures

ResNet50 offers higher accuracy than older architectures such as VGG and the original Inception (GoogLeNet).

Compared with VGG16, it does so with far fewer parameters (roughly 25.6 million versus roughly 138 million), despite being much deeper.

In summary

ResNet50 has been a game-changer in deep learning, addressing critical challenges in training deep networks and achieving remarkable performance across various tasks.

Its innovative architecture has inspired numerous extensions and remains a cornerstone of modern computer vision.

As AI continues to evolve, ResNet50 and its variants will play a pivotal role in advancing research and applications in deep learning.

Frequently asked questions (FAQs)

1. What is ResNet50, and why is it important in deep neural networks?

ResNet50 is a member of the ResNet family of deep convolutional neural networks and consists of 50 layers. It counteracts the vanishing gradient problem in deep neural networks by using residual connections, which allow deeper architectures to be trained properly. It is used primarily for image classification and other computer vision applications.

2. How does ResNet50 solve the vanishing gradient problem in deep learning?

ResNet50 uses skip connections, also called shortcut connections, that bypass one or more layers, so the residual functions only need to learn small refinements rather than the entire mapping. The shortcut itself is an identity mapping, and it improves training because the training error decreases more easily and the vanishing gradient problem often present in deeper networks is alleviated.

3. What are the essential components of the ResNet50 architecture?

The ResNet50 architecture consists of:

- Residual blocks, consisting of convolutional layers, batch normalization, and activation functions.

- Max pooling layers and fully connected layers to reduce the spatial dimensions and perform the final classification.

- Skip connections to ensure proper learning and gradient flow.

This architecture is optimized for tasks such as image recognition, object detection, and other computer vision applications.

4. How would one go about implementing ResNet50 for image classification tasks?

You could implement ResNet50 for image classification by using libraries such as Keras, where you would import layers and build up your network. The most common steps include:

1. Loading input data with appropriate input dimensions (in this case, 224x224 pixels).

2. Applying data augmentation for training on large datasets.

3. Using transfer learning by fine-tuning pre-trained ResNet50 models for your specific task.

4. Evaluating test accuracy on test samples to ensure robust performance.
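The steps above can be sketched with Keras roughly as follows. This is one possible setup under stated assumptions, not a definitive recipe: the function name `build_resnet50_classifier`, the frozen backbone, the single horizontal-flip augmentation, the Adam learning rate, and integer class labels are all illustrative choices. TensorFlow is imported inside the function so the definition can be loaded even where TensorFlow is not installed.

```python
def build_resnet50_classifier(num_classes, weights="imagenet", freeze_backbone=True):
    """Build a ResNet50-based classifier for 224x224 RGB images (a sketch)."""
    import tensorflow as tf  # imported lazily; required only when building

    # Pre-trained backbone without the original 1000-class head.
    backbone = tf.keras.applications.ResNet50(
        include_top=False,
        weights=weights,          # pass None to train from scratch
        input_shape=(224, 224, 3),
        pooling="avg",            # global average pooling to a 2048-d vector
    )
    backbone.trainable = not freeze_backbone

    inputs = tf.keras.Input(shape=(224, 224, 3))
    x = tf.keras.layers.RandomFlip("horizontal")(inputs)    # simple augmentation
    x = tf.keras.applications.resnet50.preprocess_input(x)  # ImageNet preprocessing
    x = backbone(x, training=False)  # keep batch norm in inference mode
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
        loss="sparse_categorical_crossentropy",  # assumes integer labels
        metrics=["accuracy"],
    )
    return model

# Hypothetical usage, where train_ds, val_ds and test_ds are datasets
# yielding (image batch, label batch) pairs:
# model = build_resnet50_classifier(num_classes=10)
# model.fit(train_ds, validation_data=val_ds, epochs=5)
# model.evaluate(test_ds)
```

Freezing the backbone first and unfreezing some of its layers later with a lower learning rate is a common way to fine-tune, though the best schedule depends on the dataset.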

5. What are the advantages of ResNet50 over traditional convolutional neural networks?

ResNet50 has the following advantages over traditional CNNs:

- Deep residual learning resolves the problem of vanishing gradients.

- With an increased number of layers, it achieves higher test accuracy than a plain network of the same depth.

- Makes deep residual networks simpler to train through the use of shortcut connections.

- Shows excellent performance for feature extraction, mainly in image classification and other computer vision tasks.

- Offers better reduction of the training error without extra fine-tuning.